An interesting project related to the task queue processing come to the company I work for. It was previously developed by another team. We needed to detect and resolve issues that occurred at high load on the queue.

In short, the project consisted of several databases and applications located on different servers. A ‘Task’ in the given project is a stored procedure or a .NET application. Correspondingly, the ‘task’ must be performed on a certain database and on a certain server.

All queue-related data is stored on the dedicated server. As for the servers at which tasks must be performed, they store only metadata. That is, procedures, functions, and service data related to this server. All task-related data comes from a Linked Server.

Why is it so?

- Convenience. We can anytime specify that now server B stores data.

- It was implemented before us.

There are two most popular approaches to the queue processing:

- Sending notification about the queue existence to the task engine.

- Polling a queue for the task existence.

Initially, the second variant was implemented in the project. To minimize the wait time, our application polls a queue every 100-500ms.

Actually, there is nothing bad in it, except one thing – at such implementation, a table is blocked for an extra time. Leaping ahead, I can tell that row block with read of unblocked rows takes place in the query.

READPAST, ROWLOCK, UPDLOCK

So, let’s go back to the problem. During analysis, I noticed the batch requests/sec counter value in Active Monitor. This value at small amount (about 50) of tasks in the queue, exceeded 1000, and CPU load was rising steeply.

The first thought was that I needed to switch to implementation of the first variant (sending notification to the task engine). This method was implemented with usage of Service Blocker and SignalR:

- Serive Blocker was used for sending notification about a new task;

- SignalR was used for sending notification to the task engine.

Why SignalR?

This tool was already being used in the project, I was short of time, and that is why, I decided not to implement similar tool, for example, NserviceBus.

I was really amazed that this solution did not help. Yes, performance has been improved, but it did eliminate the problem completely. I wrote a stress test for debugging that added more than 500 tasks to the queue.

Creation of such stress test allowed me to detect the root of all evil.

Analysis of the list of active queries and productivity reports during high load showed the presence of ‘very interesting queries’ that consisted of a single command:

fetch api_cursor0000000000000003

Further analysis showed that these are queries with LinkedServer. A question arose: Does a query, like select * from RemoteServer.RemoteDatabase.dbo.RemoteTable where FieldId = @Value result in a query (fetch api_cursor0000000000000003) on RemoteServer? It turns out that yes, it does, even when Linked Server is MS SQL.

For more dramatic example, let’s create the ‘Test’ table (code of the table creation is available in the appendix to the article) on server A, and then execute a query on server B:

select * from dev2.test_db.dbo.test

where dev2 is server A.

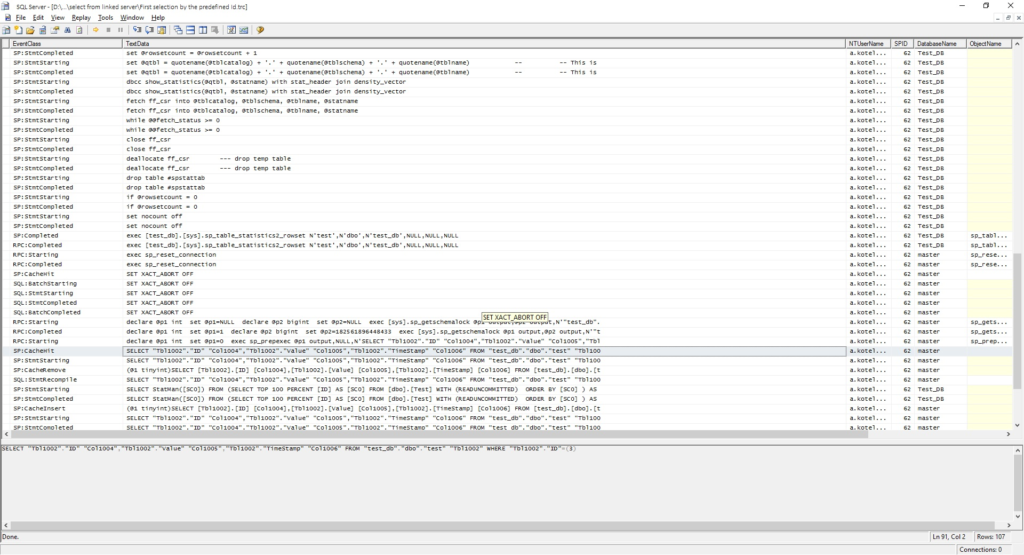

At first execution of the query, we will have a similar log in profiler on server A:

The complete log is located here.

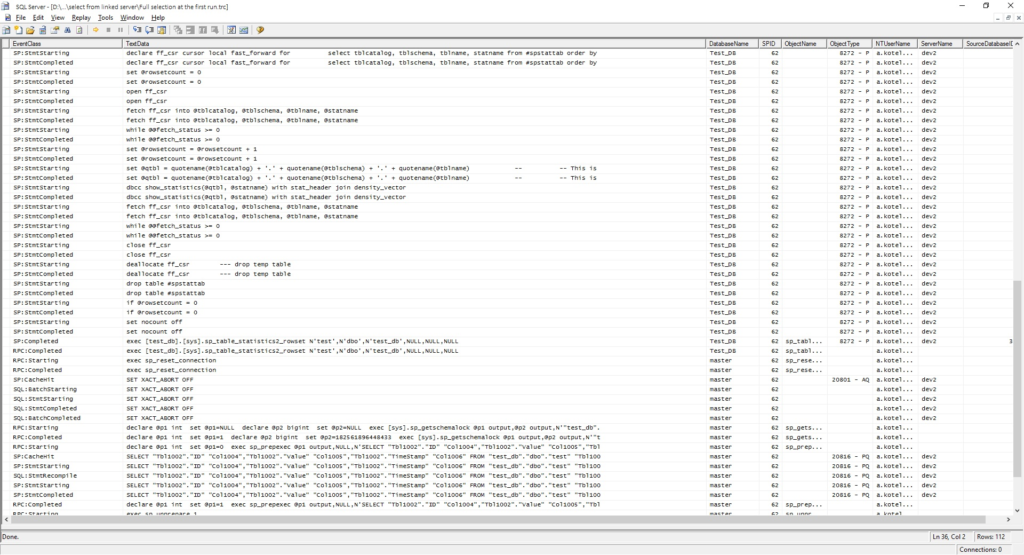

Now, let’s execute queries by ID:

select * from dev2.test_db.dbo.test where ID = 3

The complete log is available here.

As you can see from the screen shot, the query plan has been added to cache. If we execute the query for a second time, the situation will look slightly better.

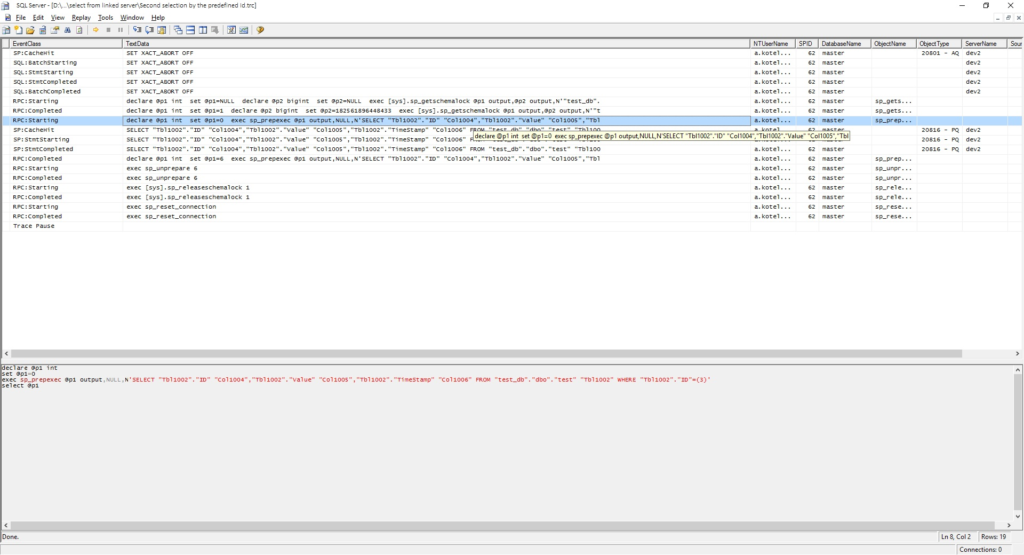

The complete log is available here.

As you can see, data is retrieved from cache this time.

When changing conditions, we will get the same selection – the first selection by the predefined Id. But the thing is that at large amount of different queries, cache becomes overloaded. SQL begins creating a bunch of queries to the table, what results in slowdowns. You might ask: What about indexes? There are indexes, but even queries with conditions by Primary Key (PK) resulted in the same problem.

What can we find in Google about it? Many things, but nothing useful:

Queries must be executed by a user who has one of the following roles: sysadmin, db_owner, db_ddladmin, in order to use statistics.

Linked Server could be setup incorrectly.

I found only a few useful articles:

As far as I understood, it is impossible to setup Linked Server in a way, so that is always used the Pull technology for retrieving data from Linked Server. It all depends on where you process the query.

I was running out of time, and the only solution that could save us was to rewrite a part of queries into dynamic SQL. i.e. to execute queries on the server that stores data.

You can work with data on Linked Server in several ways:

- Direct specification of remote server as a data source. This implementation has several cons:

- low productivity;

- it returns large volume of data.

select * from RemoteServer.RemoteDatabase.dbo.RemoteTable where Id = @Id

- Usage of OPENQUERY. It does not suit us because of several reasons:

- it makes specification of the remote server names a parameter impossible;

- it makes passing parameters to a query impossible;

- there are problems described in the Dynamic T-SQL and Benefits of its Usage article.

select * from OPENQUERY(RemoteServer, 'select * from RemoteDatabase.dbo.RemoteTable')

The following queries are executed on server B, and logs are stored on server A. Click the links under the queries to view examples of the query logs.

select * from OPENQUERY(dev2, 'select * from test_db.dbo.test') where id = 26

select * from OPENQUERY(dev2, 'select * from test_db.dbo.test where ID = 26')

- Executing a query on the remote server. Similar to OPENQUERY:

- you cannot indicate server name as a parameter, since name is setup at the stage of the procedure compilation;

- there are problems described in the Dynamic T-SQL and Benefits of its Usage article.

exec ('select * from RemoteDatabase.dbo.RemoteTable') at RemoteServerClick the links to view examples of logs for the following queries:

exec ('select * from test_db.dbo.test') at dev2exec ('select * from test_db.dbo.test where Id = 30') at dev2 - Executing the query on the remote server by executing the sp_executesql procedure.

DECLARE @C_SP_CMD nvarchar(50) = QUOTENAME(@RemoteServer) + N'.'+@RemoteDatabase +N'.sys.sp_executesql' DECLARE @C_SQL_CMD nvarchar(4000) = 'select * from dbo.RemoteTable' EXEC @C_SP_CMD @C_SQL_CMD

Click the following links to view examples of logs with usage of sp_executesql:

We have used the fourth method for solving the problem.

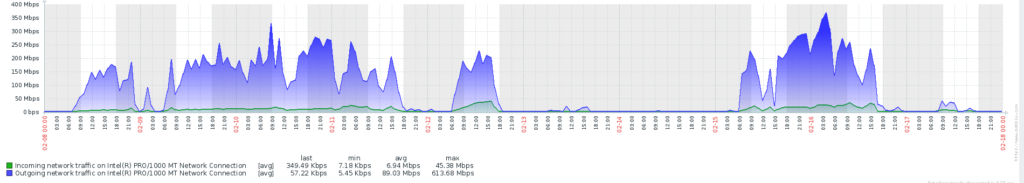

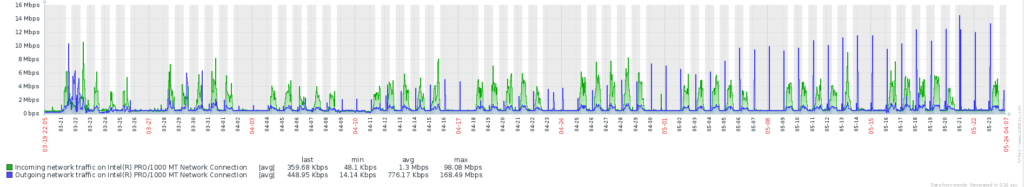

Below are several charts showing incoming and outgoing traffic on the server where the basic queue database is located before and after usage of sp_executesql. The database size is 200-300 Mb.

Incoming and outgoing traffic for several days on the server before usage of sp_executesql

Incoming and outgoing traffic for several days on the server after usage of sp_executesql

Outgoing peaks represent backup copying on NFS.

Conclusion

Initially, a driver from MS for working with MS SQL linked server is unable to execute queries on the source data server on its own. Correspondingly, we should try executing them on data source for, at least, partial solution of the performance-related problems.

Files related to the article.

Tags: dynamic sql, linked server, sql server Last modified: February 24, 2023