Database monitoring is the most essential job of any database administrator. Big organizations and companies have multiple database servers that are located either in the same data center or in the geographically different data centers. There are many standard tools for database monitoring. Those tools use the SQL Server dynamic management views and system stored procedure to populate the data. Using these DMVs, we can create our customized automated system to populate the status of the database and email the report.

In this article, I am going to demonstrate how we can use the system stored procedure and linked server to populate the information of databases located on different servers and schedule the job to send the report.

In this demo, I am going to perform the following tasks:

- Create required stored procedures on TTI609-VM1, TTI609-VM2, and TTI412-VM servers to populate information of the database, database objects, and SQL Jobs.

- Create a stored procedure to populate database summary, database object summary, and SQL Job summary from the TTI609-VM1 and TTI609-VM2 servers and store them in related tables.

- Create an SSIS package which performs the following tasks:

-

- Executes a stored procedure using Execute SQL Script Task.

- Export data from SQL tables created on TTI412-VM and store it in the individual tab of an excel file.

-

- Create an SQL Server Job to execute the SSIS package to populate the database information and stored procedure to email the report.

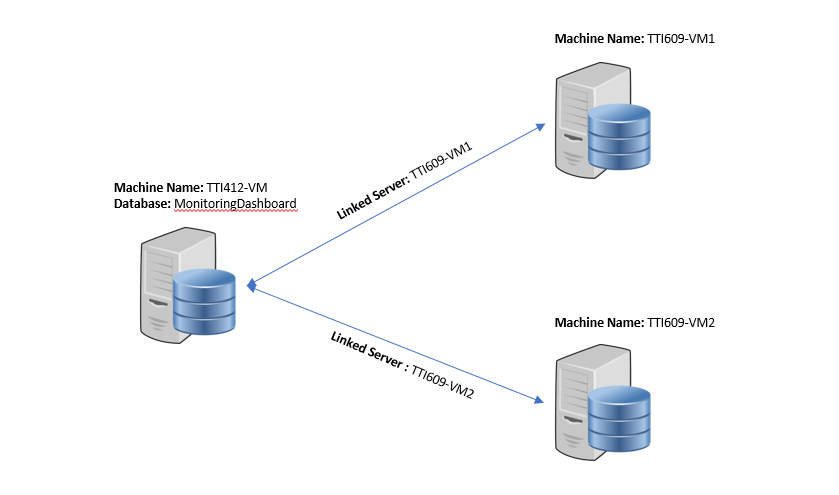

Following image illustrates the demo setup:

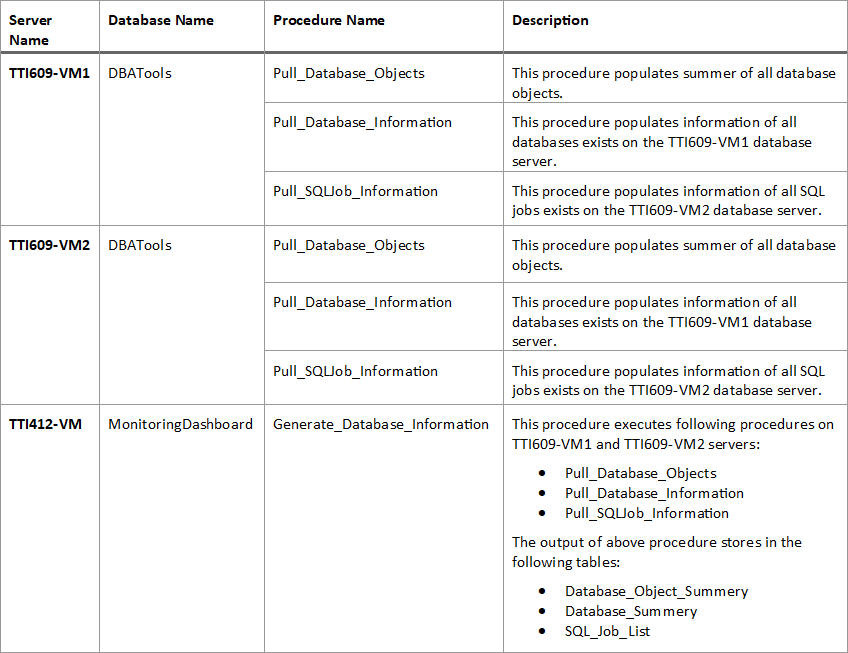

Following is the list of stored procedures:

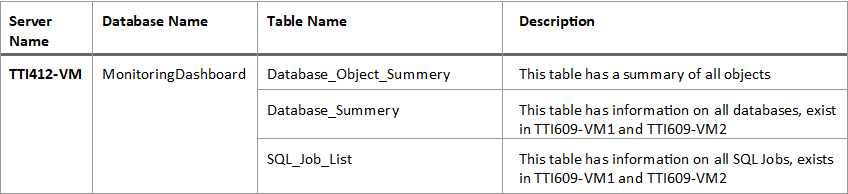

Following is the list of Tables:

Create Stored procedures on both database servers

As I mentioned, we are going to populate data from the TTI609-VM1 and TTI609-VM2 servers. The stored procedures used to populate the database will remain the same in both servers.

So firstly, I have created a database named DBATools on both servers. I created a stored procedure in those databases. To do that, execute the following code on the TTI609-VM1 and TTI609-VM2 servers:

USE [master] go /****** Object: Database [DBATools] Script Date: 10/25/2018 11:25:27 AM ******/ CREATE DATABASE [DBATools] containment = none ON PRIMARY ( NAME = N'DBATools', filename = N'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\DATA\DBATools.mdf' , size = 3264kb, maxsize = unlimited, filegrowth = 1024kb ) log ON ( NAME = N'DBATools_log', filename = N'C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\DATA\DBATools_log.ldf' , size = 816kb, maxsize = 2048gb, filegrowth = 10%) go

Create a stored procedure named Pull_Database_Information in the DBATools database. This stored procedure populates the following information of all databases existing on both database servers.

- Database Name.

- Database Compatibility Level.

- State of the database (ONLINE/OFFLINE/RESTORING/SUSPEND).

- Database recovery model (SIMPLE / FULL / BULK-LOGGED).

- Database Size in MB.

- Total Data File Size.

- Used Data File Size.

- Total Log File Size.

- Used Log File Size.

Execute the following code in the DBATools database of both database servers to create the stored procedure:

USE DBAtools

go

CREATE PROCEDURE Pull_Database_Information

AS

BEGIN

IF Object_id('tempdb.dbo.#DBSize') IS NOT NULL

DROP TABLE #dbsize

CREATE TABLE #dbsize

(

database_id INT PRIMARY KEY,

data_file_used_size DECIMAL(18, 2),

log_file_used_size DECIMAL(18, 2)

)

DECLARE @SQLCommand NVARCHAR(max)

SELECT @SQLCommand = Stuff((SELECT ' USE [' + d.NAME + '] INSERT INTO #DBSize (database_id, data_file_used_size, log_File_used_size) SELECT DB_ID() , SUM(CASE WHEN [type] = 0 THEN space_used END) , SUM(CASE WHEN [type] = 1 THEN space_used END) FROM ( SELECT s.[type], space_used = SUM(FILEPROPERTY(s.name, ''SpaceUsed'') * 8. / 1024) FROM sys.database_files s GROUP BY s.[type] ) t;'

FROM sys.databases d

WHERE d.[state] = 0

FOR xml path(''), type).value('.',

'NVARCHAR(MAX)'),

1, 2,

'')

EXEC sys.Sp_executesql

@SQLCommand

SELECT d.database_id AS 'Database ID',

d.NAME AS 'Database Name',

d.state_desc AS 'Database State',

d.recovery_model_desc AS 'Recovery Model',

t.total_db_size AS 'Database Size',

t.data_file_size AS 'Data File Size',

s.data_file_used_size AS 'Data File Used',

t.log_file_size AS 'Log file size',

s.log_file_used_size AS 'Log File Used'

FROM (SELECT database_id,

log_file_size = Cast(Sum(CASE

WHEN [type] = 1 THEN size

END) * 8. / 1024 AS DECIMAL(18, 2)

),

data_file_size = Cast(Sum(CASE

WHEN [type] = 0 THEN size

END) * 8. / 1024 AS

DECIMAL(18, 2))

,

total_DB_size = Cast(

Sum(size) * 8. / 1024 AS DECIMAL(18, 2))

FROM sys.master_files

GROUP BY database_id) t

JOIN sys.databases d

ON d.database_id = t.database_id

LEFT JOIN #dbsize s

ON d.database_id = s.database_id

ORDER BY t.total_db_size DESC

END

Secondly, create stored procedures named Pull_Database_Objects in the DBATools database. This stored procedure iterates through all the databases within both database servers and populates count of all database objects. It populates the following columns:

- Server / Host Name.

- Database Name.

- Type of database object (Table / Stored procedure / SQL Scaler functions / constraints etc…)

- Total number of database objects.

Execute following code in the DBATools database on both database servers to create the stored procedure:

USE dbatools go CREATE PROCEDURE [Pull_database_objects] AS BEGIN CREATE TABLE #finalsummery ( id INT IDENTITY (1, 1), databasename VARCHAR(350), objecttype VARCHAR(200), totalobjects INT ) DECLARE @SQLCommand NVARCHAR(max) DECLARE @I INT=0 DECLARE @DBName VARCHAR(350) DECLARE @DBCount INT CREATE TABLE #databases ( NAME VARCHAR(350) ) INSERT INTO #databases (NAME) SELECT NAME FROM sys.databases WHERE database_id > 4 AND NAME NOT IN ( 'ReportServer', 'reportservertempdb' ) SET @DBCount=(SELECT Count(*) FROM #databases) WHILE ( @DBCount > @I ) BEGIN SET @DBName=(SELECT TOP 1 NAME FROM #databases) SET @SQLCommand=' Insert Into #FinalSummery (DatabaseName,ObjectType,TotalObjects) Select ''' + @DBName + ''', Case when Type=''TR'' then ''SQL DML trigger'' when Type=''FN'' then ''SQL scalar function'' when Type=''D'' then ''DEFAULT (constraint or stand-alone)'' when Type=''PK'' then ''PRIMARY KEY constraint'' when Type=''P'' then ''SQL Stored Procedure'' when Type=''U'' then ''Table (user-defined)'' when Type=''V'' then ''View'' when Type=''X'' then ''Extended stored procedure'' End As ObjectType, Count(Name)TotalObjects from ' + @DBName + '.sys.all_objects group by type' EXEC Sp_executesql @SQLCommand DELETE FROM #databases WHERE NAME = @DBName SET @i=@i + 1 END SELECT Host_name() AS 'Server Name', databasename, objecttype, totalobjects, Getdate() AS 'ReportDate' FROM #finalsummery WHERE objecttype IS NOT NULL DROP TABLE #finalsummery END

Create stored procedures named Pull_SQLJob_Information in the DBATools database. This stored procedure iterates through all the database server and populates the information of all SQL jobs and their statuses. It populates the following columns:

- Server / Host Name.

- SQL Job Name.

- Owner of SQL Job.

- Job Category.

- Description of Job.

- Status of Job (Enabled / disabled)

- A date of job creation.

- Job Modified date.

- Job scheduled status.

- Schedule Name.

- Last execution date and time

- Last execution status.

Execute the following code in the DBATools database on both database servers to create the stored procedure:

CREATE PROCEDURE Pull_sqljob_information AS BEGIN SELECT Host_name() AS 'Server Name', a.NAME AS 'Job Name', d.NAME AS Owner, b.NAME AS Category, a.description AS Description, CASE a.enabled WHEN 1 THEN 'Yes' WHEN 0 THEN 'No' END AS 'IsEnabled', a.date_created AS CreatedDate, a.date_modified AS ModifiedDate, CASE WHEN f.schedule_uid IS NULL THEN 'No' ELSE 'Yes' END AS 'Scheduled?', f.NAME AS JobScheduleName, Max(Cast( Stuff(Stuff(Cast(g.run_date AS VARCHAR), 7, 0, '-'), 5, 0, '-') + ' ' + Stuff(Stuff(Replace(Str(g.run_time, 6, 0), ' ', '0'), 5, 0, ':'), 3, 0, ':') AS DATETIME)) AS [LastRun], CASE g.run_status WHEN 0 THEN 'Failed' WHEN 1 THEN 'Success' WHEN 2 THEN 'Retry' WHEN 3 THEN 'Canceled' WHEN 4 THEN 'In progress' END AS Status FROM msdb.dbo.sysjobs AS a INNER JOIN msdb.dbo.sysjobhistory g ON a.job_id = g.job_id LEFT JOIN msdb.dbo.syscategories AS b ON a.category_id = b.category_id LEFT JOIN msdb.dbo.sysjobsteps AS c ON a.job_id = c.job_id AND a.start_step_id = c.step_id LEFT JOIN msdb.sys.database_principals AS d ON a.owner_sid = d.sid LEFT JOIN msdb.dbo.sysjobschedules AS e ON a.job_id = e.job_id LEFT JOIN msdb.dbo.sysschedules AS f ON e.schedule_id = f.schedule_id GROUP BY a.NAME, d.NAME, b.NAME, a.description, a.enabled, f.schedule_uid, f.NAME, a.date_created, a.date_modified, g.run_status ORDER BY a.NAME END

Create Stored procedures, Linked Server and tables on the central server

Once the procedures have been created on the TTI609-VM1 and TTI609-VM2 database servers, create required procedures and tables on the central server (TTI412-VM).

I created a separate database named as MonitoringDashboard on the TTI412-VM server. Execute the following code to create a database on the central server.

USE [master] go /****** Object: Database [MonitoringDashboard] Script Date: 10/25/2018 2:44:09 PM ******/ CREATE DATABASE [MonitoringDashboard] containment = none ON PRIMARY ( NAME = N'MonitoringDashboard', filename = N'E:\MS_SQL\SQL2017_Data\MonitoringDashboard.mdf', size = 8192kb, maxsize = unlimited, filegrowth = 65536kb ) log ON ( NAME = N'MonitoringDashboard_log', filename = N'E:\MS_SQL\SQL2017_Log\MonitoringDashboard_log.ldf', size = 8192kb, maxsize = 2048gb, filegrowth = 65536kb ) go

Once the database has been created, create a stored procedure which uses LINKED Server to execute a procedure on the TTI609-VM1 and TTI609-VM2 database servers. Execute the following code in the “master” database of the TTI412-VM database server to create a linked server:

Script 1: Create Linked server TTI609-VM1

USE [master] go /****** Object: LinkedServer [TTI609-VM1] Script Date: 10/25/2018 2:49:28 PM ******/ EXEC master.dbo.Sp_addlinkedserver @server = N'TTI609-VM1', @srvproduct=N'SQL Server' /* For security reasons the linked server remote logins password is changed with ######## */ EXEC master.dbo.Sp_addlinkedsrvlogin @rmtsrvname=N'TTI609-VM1', @useself=N'False', @locallogin=NULL, @rmtuser=N'sa', @rmtpassword='########' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'collation compatible', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'data access', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'dist', @optvalue=N'false' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'rpc', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'rpc out', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'connect timeout', @optvalue=N'0' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'query timeout', @optvalue=N'0' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'use remote collation', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM1', @optname=N'remote proc transaction promotion', @optvalue=N'false' go

Script 2: Create Linked server TTI609-VM2

USE [master] go /****** Object: LinkedServer [TTI609-VM2] Script Date: 10/25/2018 2:55:29 PM ******/ EXEC master.dbo.Sp_addlinkedserver @server = N'TTI609-VM2', @srvproduct=N'SQL Server' /* For security reasons the linked server remote logins password is changed with ######## */ EXEC master.dbo.Sp_addlinkedsrvlogin @rmtsrvname=N'TTI609-VM2', @useself=N'False', @locallogin=NULL, @rmtuser=N'sa', @rmtpassword='########' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'collation compatible', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'data access', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'dist', @optvalue=N'false' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'rpc', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'rpc out', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'connect timeout', @optvalue=N'0' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'collation name', @optvalue=NULL go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'query timeout', @optvalue=N'0' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'use remote collation', @optvalue=N'true' go EXEC master.dbo.Sp_serveroption @server=N'TTI609-VM2', @optname=N'remote proc transaction promotion', @optvalue=N'false' go

Create a Stored procedure and tables

Once the linked server has been created, we need to create three tables named Database_Object_Summery, Database_Summery, and SQL_Job_List in the MonitoringDashboard database. These tables store information populated by the Generate_Database_Information stored procedure and later the data, stored in those tables that will be used to generate a report in excel.

Execute the following code to create the SQL_Job_List table:

USE [MonitoringDashboard] go CREATE TABLE [dbo].[sql_job_list] ( [id] [INT] IDENTITY(1, 1) NOT NULL, [servername] [VARCHAR](250) NULL, [jobname] [VARCHAR](250) NULL, [jobowner] [VARCHAR](250) NULL, [jobcategory] [VARCHAR](250) NULL, [jobdescription] [VARCHAR](250) NULL, [jobstatus] [VARCHAR](50) NULL, [createdate] [DATETIME] NULL, [modifieddate] [DATETIME] NULL, [isscheduled] [VARCHAR](5) NULL, [schedulename] [VARCHAR](250) NULL, [reportdate] [DATETIME] NULL ) ON [PRIMARY] go ALTER TABLE [dbo].[sql_job_list] ADD DEFAULT (Getdate()) FOR [ReportDate] go

Execute the following code to create the lst_dbservers table:

USE [MonitoringDashboard] go CREATE TABLE [dbo].[lst_dbservers] ( [id] [INT] IDENTITY(1, 1) NOT NULL, [servername] [VARCHAR](50) NOT NULL, [addeddate] [DATETIME] NOT NULL, PRIMARY KEY CLUSTERED ( [id] ASC )WITH (pad_index = OFF, statistics_norecompute = OFF, ignore_dup_key = OFF, allow_row_locks = on, allow_page_locks = on) ON [PRIMARY], UNIQUE NONCLUSTERED ( [servername] ASC )WITH (pad_index = OFF, statistics_norecompute = OFF, ignore_dup_key = OFF, allow_row_locks = on, allow_page_locks = on) ON [PRIMARY] ) ON [PRIMARY] go ALTER TABLE [dbo].[lst_dbservers] ADD DEFAULT (Getdate()) FOR [AddedDate] go

Execute the following code to create the Database_Summery table:

USE [MonitoringDashboard] go CREATE TABLE [dbo].[database_summery] ( [id] [INT] IDENTITY(1, 1) NOT NULL, [servername] [VARCHAR](150) NULL, [databaseid] [INT] NULL, [databasename] [VARCHAR](250) NULL, [databasestatus] [VARCHAR](50) NULL, [recoverymodel] [VARCHAR](50) NULL, [compatibilitylevel] [INT] NULL, [databasecreatedate] [DATE] NULL, [databasecreatedby] [VARCHAR](150) NULL, [dbsize] [NUMERIC](10, 2) NULL, [datafilesize] [NUMERIC](10, 2) NULL, [datafileused] [NUMERIC](10, 2) NULL, [logfilesize] [NUMERIC](10, 2) NULL, [logfileused] [NUMERIC](10, 2) NULL, [reportdate] [DATETIME] NULL ) ON [PRIMARY] go ALTER TABLE [dbo].[database_summery] ADD DEFAULT (Getdate()) FOR [ReportDate] go

Execute the following code to create the Database_Object_Summery table:

USE [MonitoringDashboard] go CREATE TABLE [dbo].[database_object_summery] ( [id] [INT] IDENTITY(1, 1) NOT NULL, [servername] [VARCHAR](250) NULL, [databasename] [VARCHAR](250) NULL, [objecttype] [VARCHAR](50) NULL, [objectcount] [INT] NULL, [reportdate] [DATETIME] NULL ) ON [PRIMARY] go ALTER TABLE [dbo].[database_object_summery] ADD DEFAULT (Getdate()) FOR [ReportDate] go

Once the tables are created, create a stored procedure named Generate_Database_Information on the MonitoringDashboard database. Using “RPC”, it executes stored procedures, created on the TTI609-VM1 and TTI609-VM2 database servers to populate the data.

Execute the following code to create a stored procedure:

Create PROCEDURE Generate_database_information AS BEGIN /*Cleanup*/ TRUNCATE TABLE database_object_summery TRUNCATE TABLE database_summery TRUNCATE TABLE sql_job_list DECLARE @ServerCount INT DECLARE @i INT =0 DECLARE @SQLCommand_Object_Summery NVARCHAR(max) DECLARE @SQLCommand_Database_Information NVARCHAR(max) DECLARE @SQLCommand_SQL_Job_Information NVARCHAR(max) DECLARE @servername VARCHAR(100) CREATE TABLE #db_server_list ( servername VARCHAR(100) ) INSERT INTO #db_server_list (servername) SELECT servername FROM lst_dbservers SET @ServerCount= (SELECT Count(servername) FROM #db_server_list) WHILE ( @ServerCount > @i ) BEGIN SET @servername=(SELECT TOP 1 servername FROM #db_server_list) SET @SQLCommand_Object_Summery = 'insert into Database_Object_Summery (ServerName,DatabaseName,ObjectType,ObjectCount,ReportDate) exec [' + @servername + '].DBATools.dbo.[Pull_Database_Objects]' SET @SQLCommand_Database_Information = 'insert into Database_Summery (ServerName,DatabaseID,DatabaseName,DatabaseStatus,Recoverymodel,CompatibilityLevel,DatabaseCreateDate,DatabaseCreatedBy,DBSize,DataFileSize,DataFileUsed,LogFileSize,LogFileUsed) exec [' + @servername + '].DBATools.dbo.[Pull_Database_Information]' SET @SQLCommand_SQL_Job_Information = 'insert into SQL_Job_List (ServerName,JobName,JobOwner,Jobcategory,JobDescription,JobStatus,CreateDate,ModifiedDate,IsScheduled,ScheduleName) exec [' + @servername + '].DBATools.dbo.[Pull_SQLJob_Information]' EXEC Sp_executesql @SQLCommand_Object_Summery EXEC Sp_executesql @SQLCommand_Database_Information EXEC Sp_executesql @SQLCommand_SQL_Job_Information DELETE FROM #db_server_list WHERE servername = @servername SET @I=@i + 1 END END

Once the procedure is created, create an SSIS package to export data into an Excel file.

Create SSIS Package to export data in excel file

In my past articles, I explained the steps to configure the data flow tasks, OLEDB Connections, and Excel connections, hence I skip this part.

To export data into an Excel file, Open SQL Server Data tools and create a new SSIS project named Export_Database_Information.

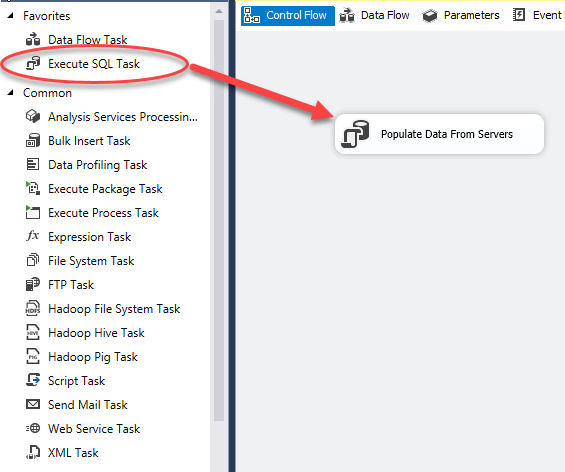

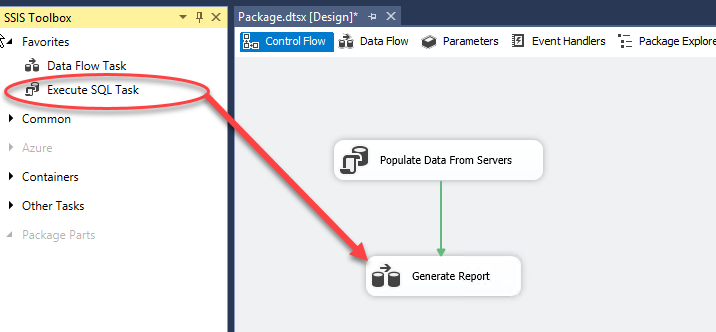

Once the project is created, drag and drop Execute SQL Task to the Control Flow window and rename it as Populate Data from Servers. See the following image:

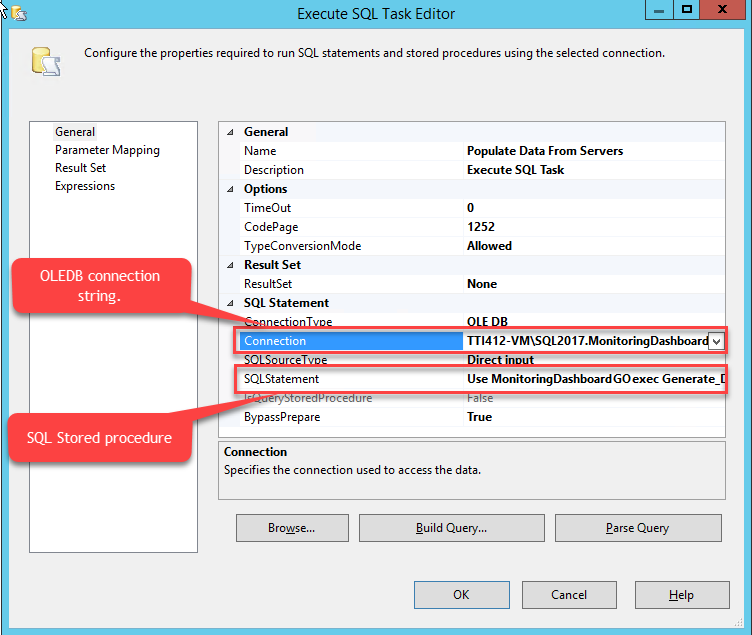

Double-click Execute SQL Task (Populate Data from Server). The Execute SQL Task Editor dialog box opens to configure SQL connection. See the following image:

In the Connection filed, select the OLEDB connection string and in the SQL Statement field, provide the following query:

USE monitoringdashboard go EXEC Generate_database_information

Click OK to close the dialog box.

On the Control Flow screen, drag and drop Data Flow Task from SSIS Toolbox and rename it as Generate Report. See the following image:

Double-click to open the Data Flow window.

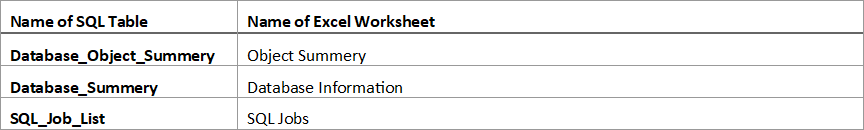

As I mentioned earlier, the Generate_Database_Information procedure inserts the output of the database servers in the following tables:

- Database_Object_Summery

- Database_Summery

- SQL_Job_List

I created an Excel file that has three worksheets. The following tables show the mapping of the SQL tables and Excel Worksheet.

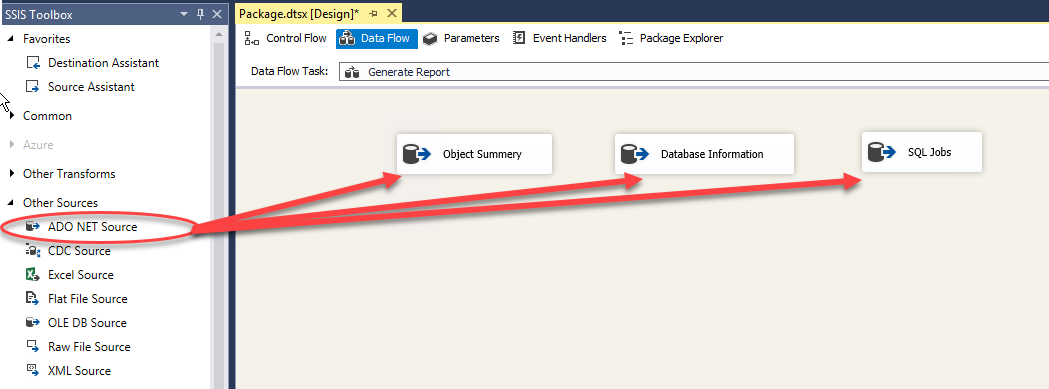

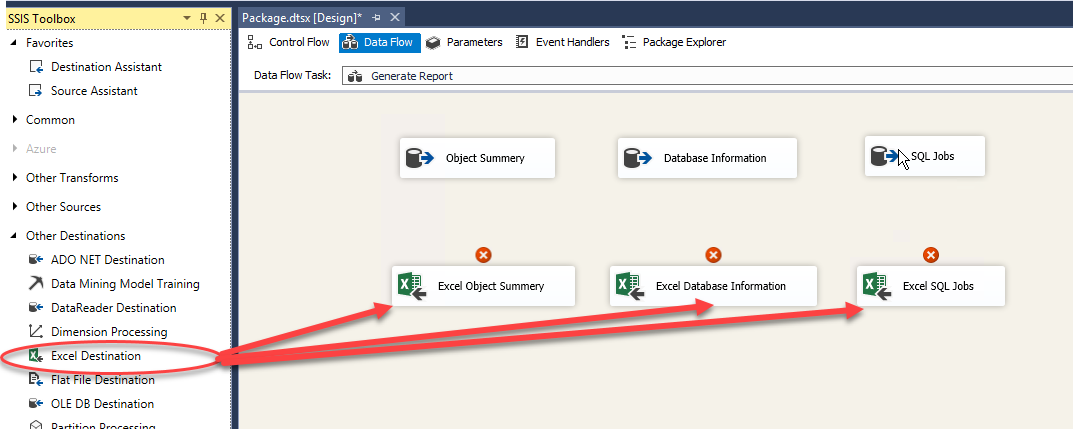

In the Data Flow window, drag and drop three ADO.Net Sources and three Excel destinations. See the following image:

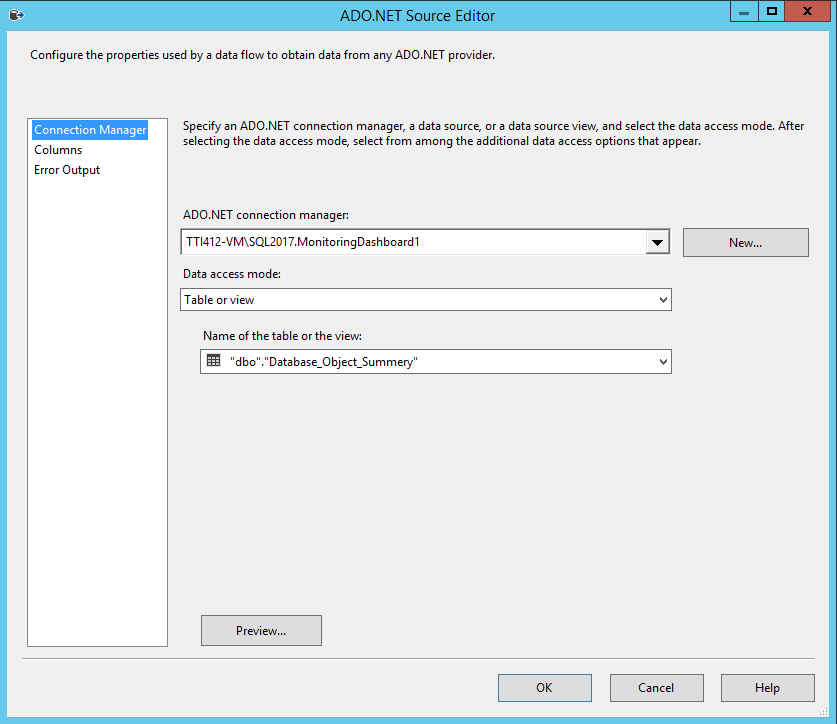

Double-click Object Summary (ADO.NET Source) in ADO.NET Source Editor.

- Select TTI412-VM\SQL2017MonitoringDashboard from the ADO.NET connection manager drop-down box.

- Select Table or View from the Data access mode drop-down box.

- Select Database_Object_Summery from Name of the table or the view drop-down box.

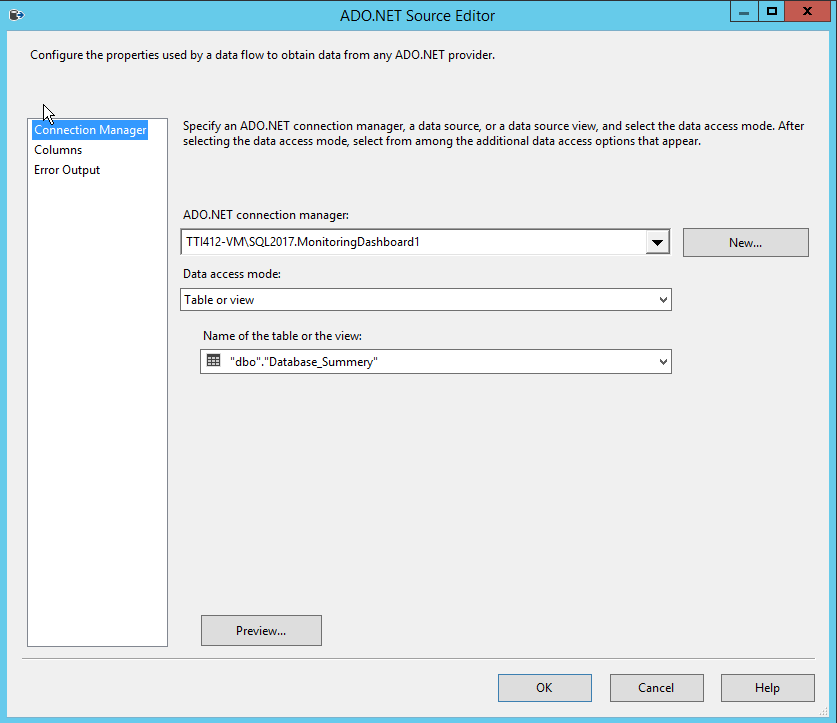

Double-click Database Information (ADO.NET Source) in ADO.NET Source Editor.

- Select “TTI412-VM\SQL2017MonitoringDashboard” from the ADO.NET connection manager drop-down box.

- Select Table or View from the Data access mode drop-down box.

- Select “Database_Summery” from the Name of the table or the view drop-down box.

Double-click SQL Jobs (ADO.NET Source) in ADO.NET Source Editor.

- Select TTI412-VM\SQL2017MonitoringDashboard in the ADO.NET connection manager.

- Select Table or View from the Data access mode drop-down box.

- Select SQL_Job_List in the Name of the table or the view drop-down box.

Now, drag and drop three Excel destinations from SSIS Toolbox. See the following image:

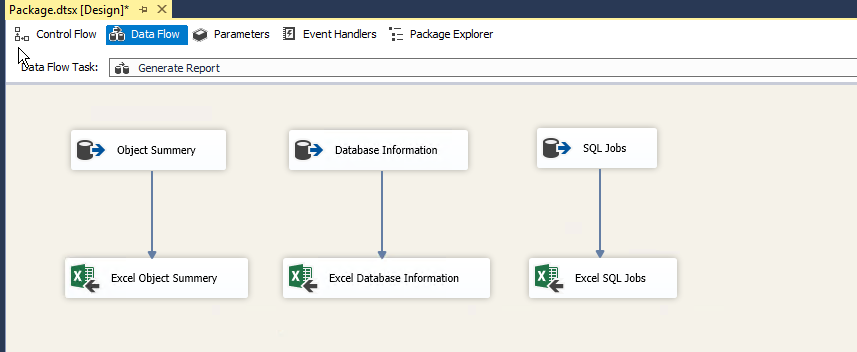

Once destinations have been copied, drag blue arrow beneath the ADO.Net source and drop it on the Excel destination. Do the same for all. See the following image:

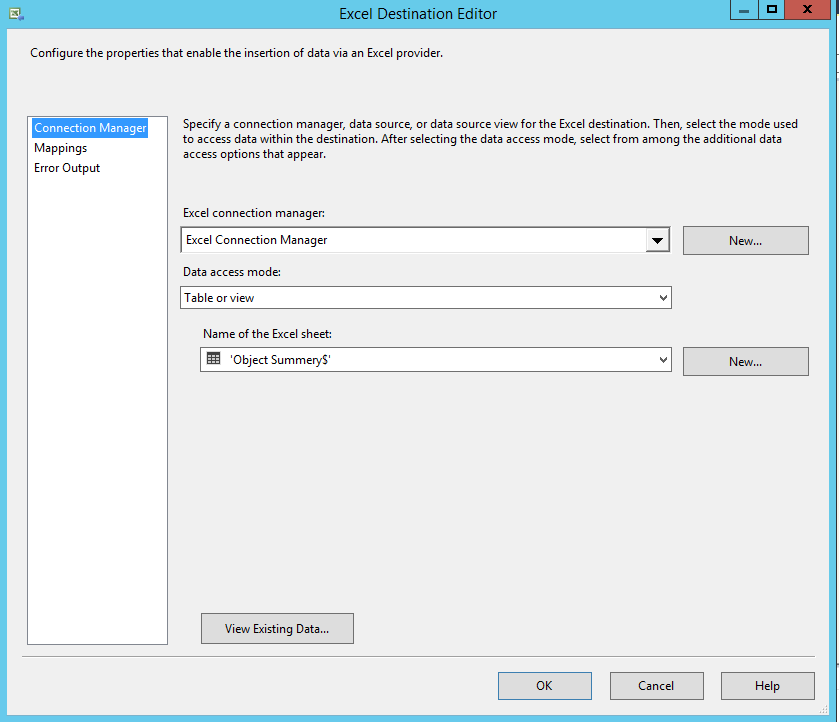

Double-click Excel Object Summary (ADO.NET Source) in Excel Destination Editor.

- Select Excel Connection Manager from the Excel connection manager drop-down box.

- Select Table or View from the Data access mode drop-down box.

- Select Object Summery$ from the Name of the excel sheet drop-down box.

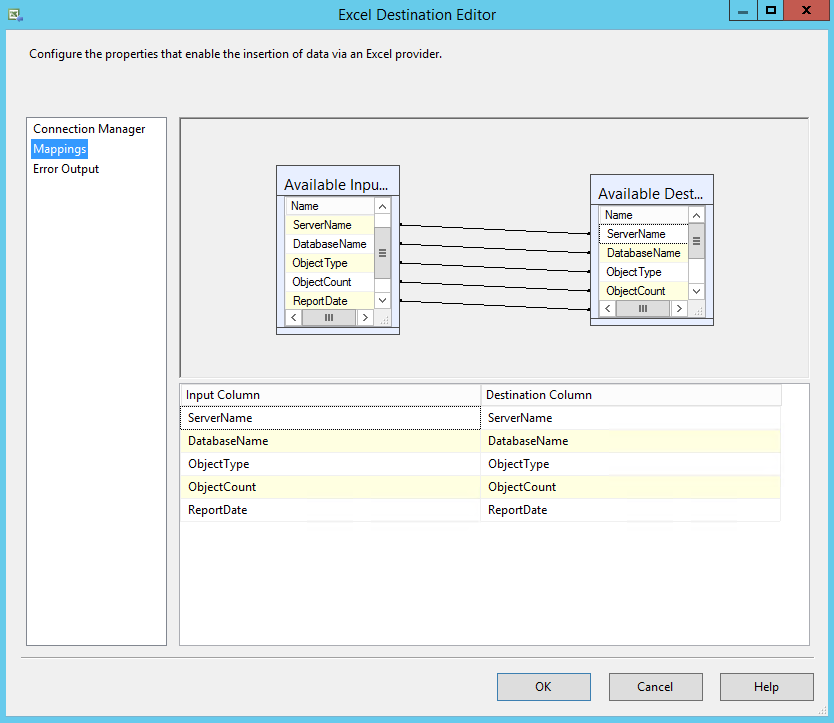

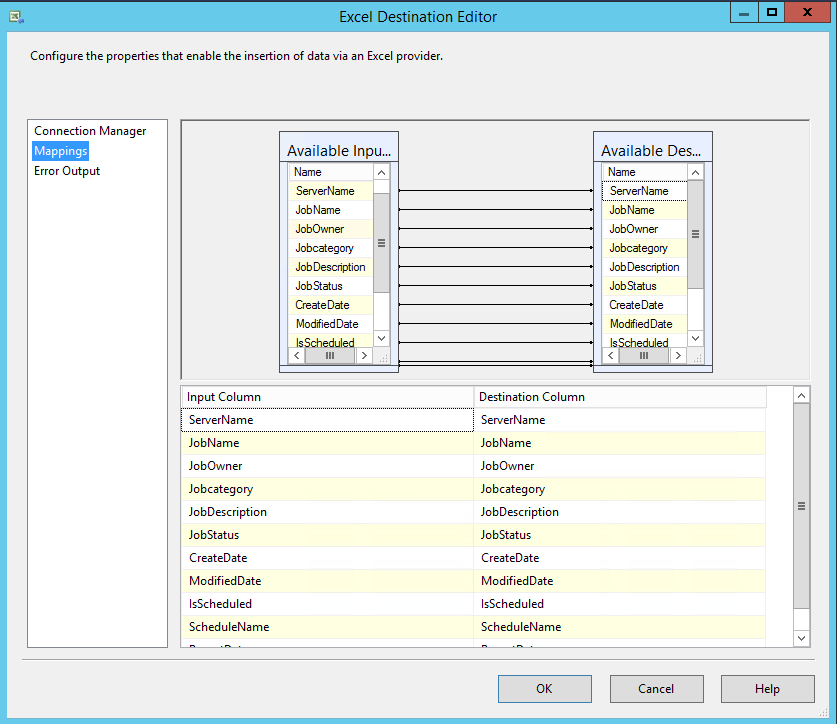

As I mentioned, column names of the SQL table and the Excel columns are same, hence mapping will be done automatically. Click Mapping to map the columns. See the following image:

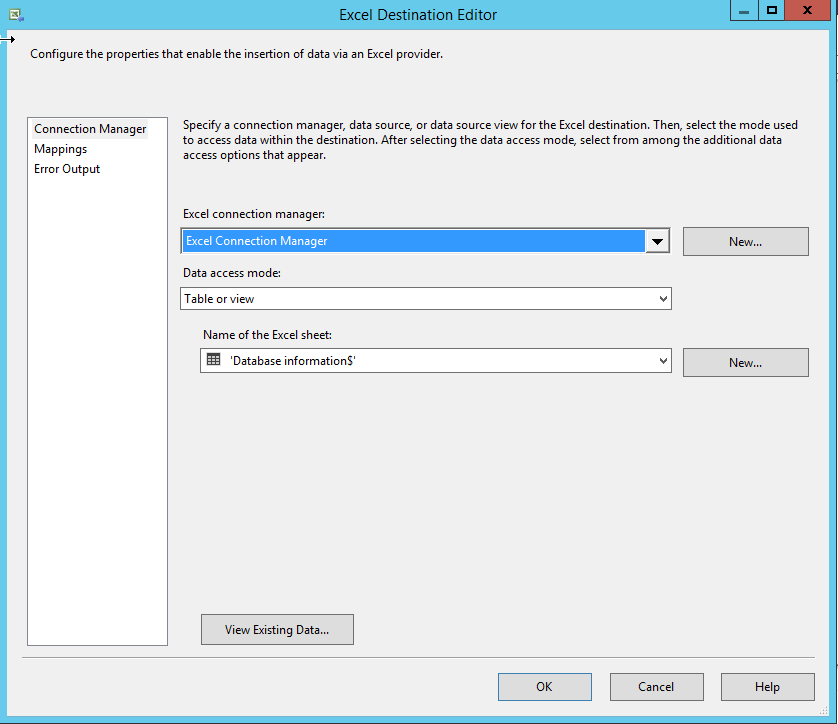

Double-click Excel Database Information (Excel Destination) in Excel Destination Editor.

- Select Excel Connection Manager from the Excel connection manager drop-down box.

- Select Table or View from the Data access mode drop-down box.

- Select Database information$ from the Name of the excel sheet drop-down box.

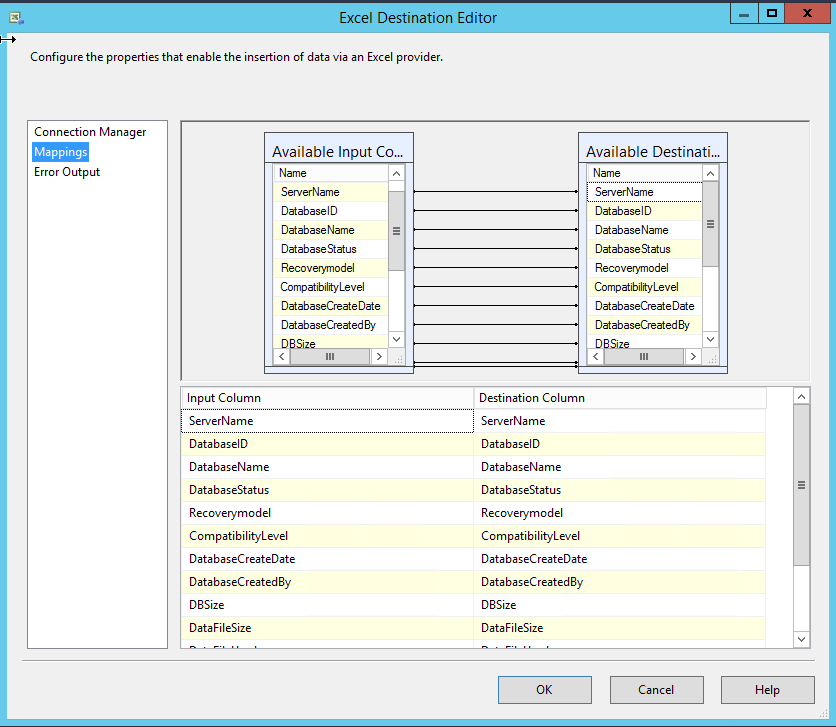

As I mentioned, column names of the SQL table and the Excel columns are same, hence mapping will be done automatically. Click Mapping to map the columns. See the following image:

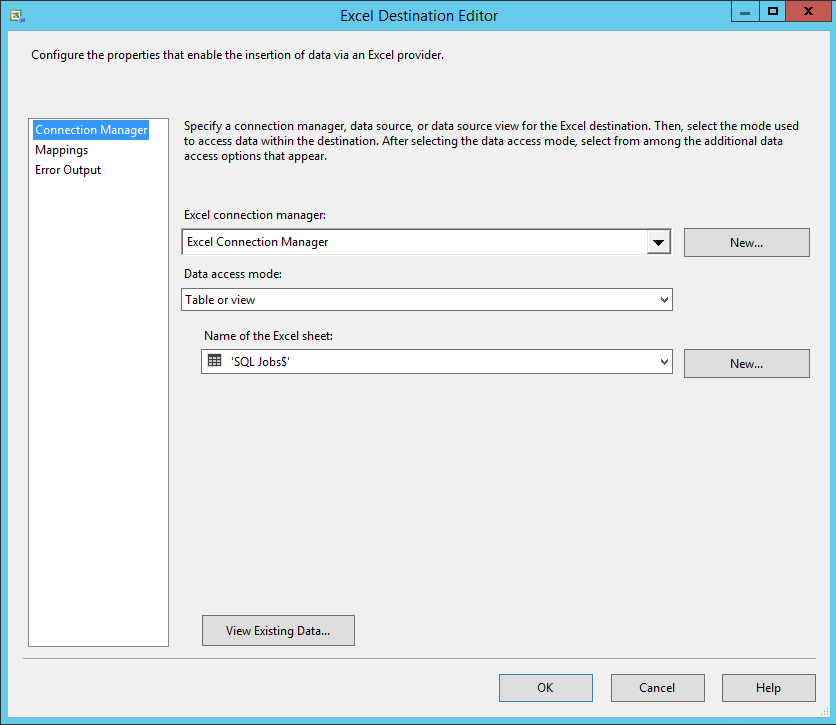

Double-click Excel SQL Jobs (Excel Destination) in Excel Destination Editor.

- Select Excel Connection Manager from the Excel connection manager drop-down box.

- Select Table or View from the Data access mode drop-down box.

- Select “SQL Jobs$ from the Name of the Excel sheet drop-down box.

As I mentioned, column names of the SQL table and the Excel columns are same, hence mapping will be done automatically. Click Mapping to map the columns. See the following image:

Create a SQL Job to email the database report

Once the package is created, create a SQL job to perform the following activities:

- Execute the SSIS package to populate data from all servers.

- Email the database report to the required team.

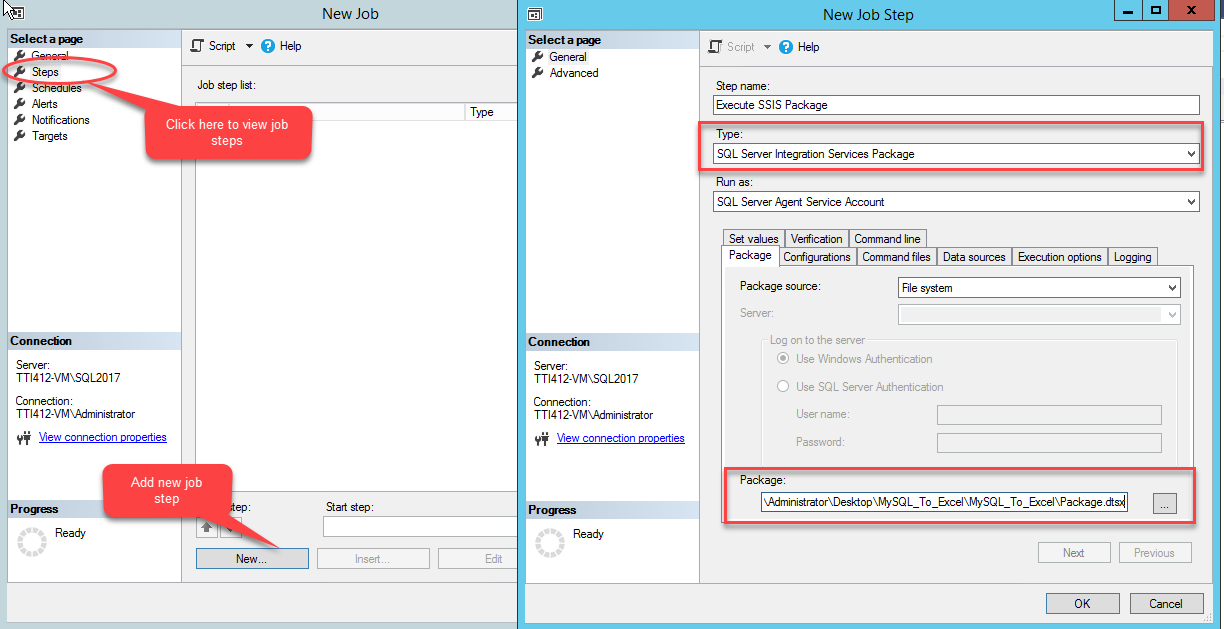

In SQL Job, we need to create two steps. The first step will execute the SSIS package and the second step will execute the procedure to send an email.

To create a SQL Job, Open SSMS >> SQL Server Agent >> Right-click New SQL Job.

Om the New Job wizard, select the Step option and click New. In the New Job Step dialog box, in the Step name text box, provide the desired name, select SQL Server Integration services package from the Type drop-down box. Provide a location of the SSIS package in the Package Text box. See the following image:

Click OK to close New Job Step.

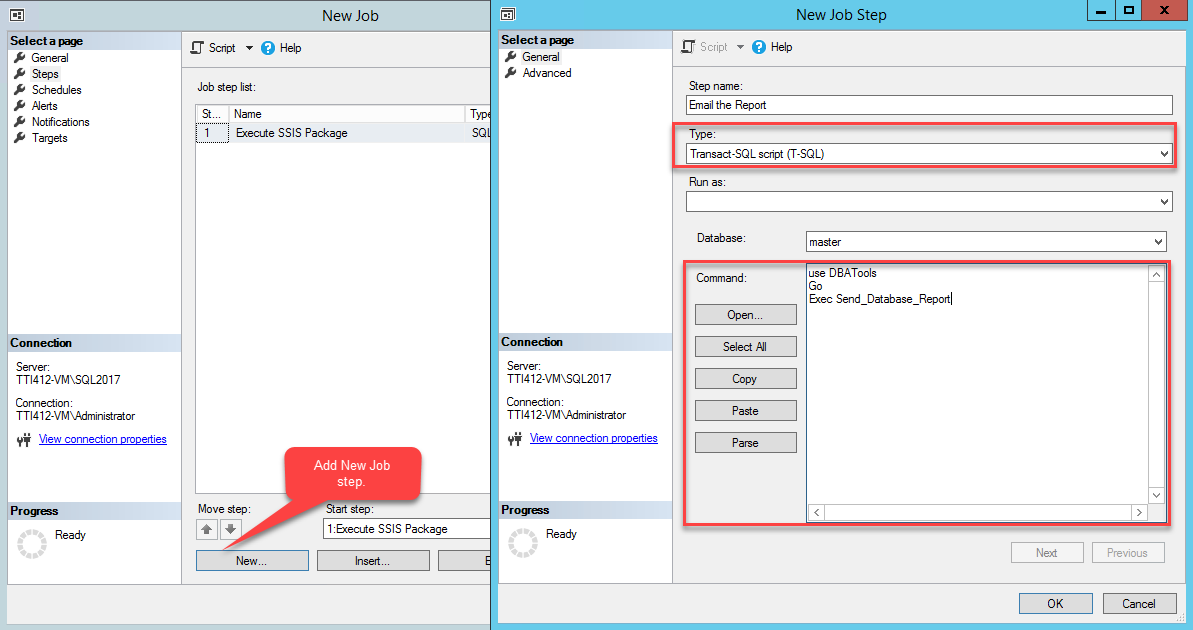

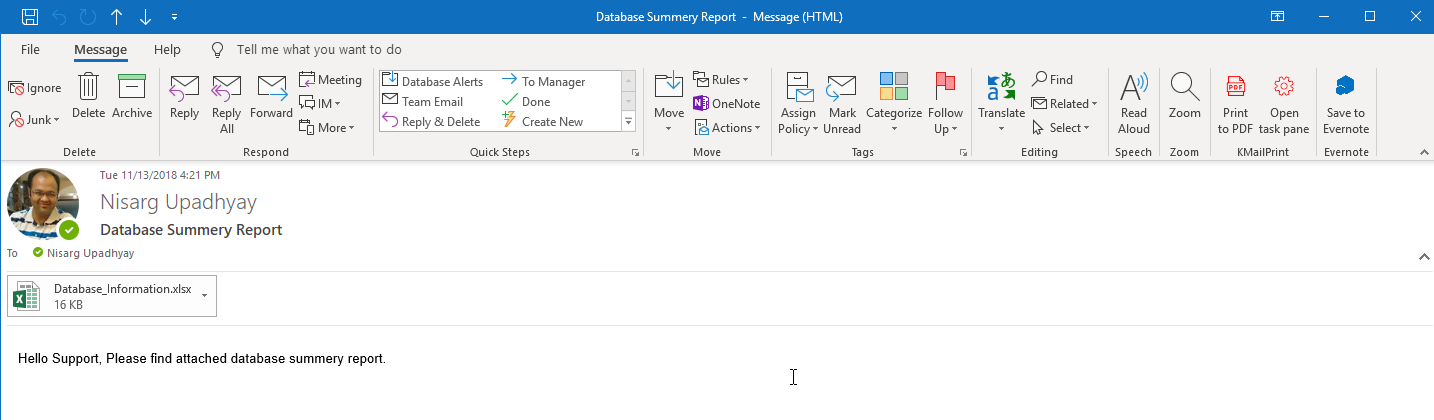

Create another job step which will execute a stored procedure to email the report. It uses a system procedure to send an email. The SSIS package copies the database information on a specific location, hence provide the full path of the excel file in @file_attachments parameter of sp_send_dbmail stored procedure.

To create the stored procedure, execute the following code in the DBATools database of Central Server:

CREATE PROCEDURE Send_database_report AS BEGIN DECLARE @ProfileName VARCHAR(150) SET @ProfileName = (SELECT NAME FROM msdb..sysmail_profile WHERE profile_id = 7) DECLARE @lsMessage NVARCHAR(max) SET @lsMessage = '<p style="font-family:Arial; font-size:10pt"> Hello Support, Please find attached database summery report. ' + '</p>' EXEC msdb.dbo.Sp_send_dbmail @recipients='[email protected]', @body=@lsMessage, @subject='Database Summery Report', @file_attachments= 'C:\Users\Administrator\Desktop\Database_Information.xlsx', @copy_recipients='', @blind_copy_recipients='', @body_format='HTML', @profile_name=@ProfileName END

Once the procedure is created, add a new SQL Job step. Click New. In the New Job Step dialog box, provide a Job Step name, and select Transact-SQL script (T-SQL) from the Type drop-down box. In the Command Text box, write the following code:

USE DBAtools Go EXEC Send_database_report

See the following image:

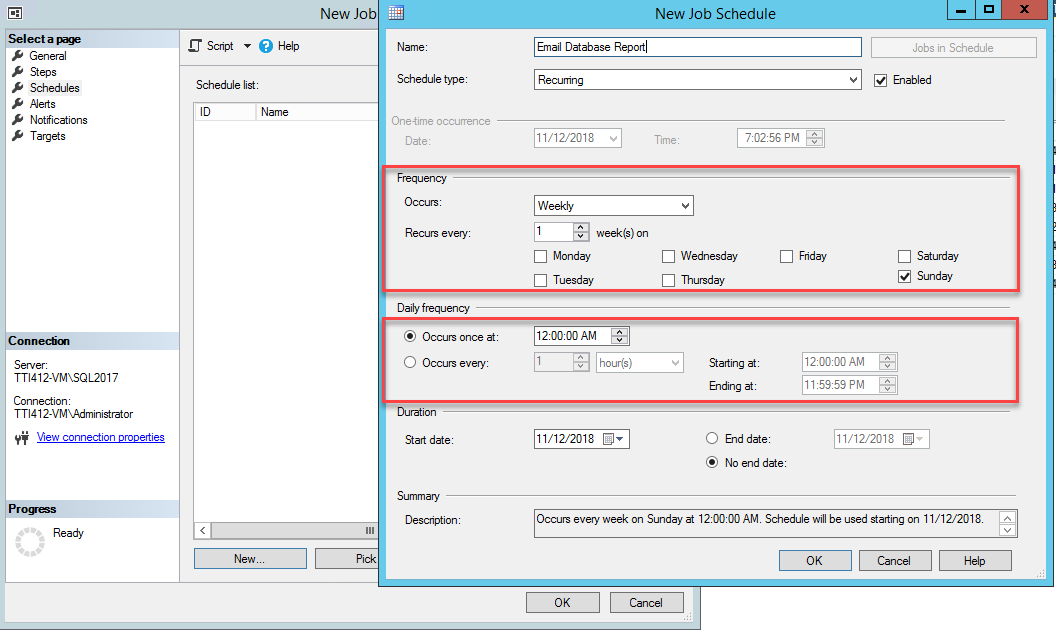

Click OK to close the wizard. Now to configure Job Schedule, select Schedules on the New Job window. Click New to add a schedule.

In the New Job Schedule dialog box, provide the desired name in the Name text box, choose frequency and time. See the following image:

Close OK to close the New Job Schedule and in the New Job window, click on OK to close the dialog box.

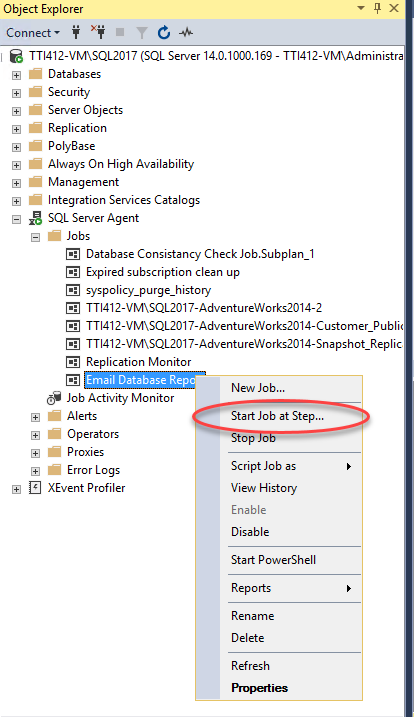

Now, to test the SQL Job, right-click the Email Database Report SQL job and click Start Job at Step.

In the result of successful completion of the SQL Job, you will receive an email with the database report. See the following image:

Summary

In this article I have covered as follows:

- How to populate information of the databases located on remote DB server.

- Create an SSIS package to populate database information and export it to excel file

- Create a multi-step SQL job to generate the report by executing an SSIS package and email the report.