In my previous article, I have briefly covered database statistics, its importance, and why statistics should be updated. Moreover, I have demonstrated a step by step process to create a SQL Server maintenance plan to update statistics. In this article, the following issues will be explained: 1. How to update statistics using T-SQL Command. 2. How to identify the frequently updated tables using T-SQL and also how to update the statistics of tables with frequently inserted/ updated / deleted data .

Updating statistics using T-SQL

You can update statistics using the T-SQL script. If you want to update statistics using T-SQL or SQL Server management studio, you need ALTER database permission on the database. See the T-SQL code example to update the statistics of a specific table:

UPDATE STATISTICS <schema_name>.<table_name>.

Let us consider the example of updating the statistics of the OrderLines table of the WideWorldImporters database. The following script will do that.

UPDATE STATISTICS [Sales].[OrderLines]

If you want to update the statistics of a specific index, you can use the following script:

UPDATE STATISTICS <schema_name>.<table_name> <index_name>

In case you want to update the statistics of the IX_Sales_OrderLines_Perf_20160301_02 index of the OrderLines table, you can execute the following script:

UPDATE STATISTICS [Sales].[OrderLines] [IX_Sales_OrderLines_Perf_20160301_02]

You can also update the statistics of the entire database. If you have a very small database with few tables and little amount of data, then you can update the statistics of all tables within a database. See the following script:

USE wideworldimporters go EXEC Sp_updatestats

Updating statistics for tables with frequently inserted / updated / deleted data

On large databases, scheduling the statistics job becomes complicated, especially when you have only a few hours to perform index maintenance, update statistics, and fulfill other maintenance tasks. By a large database I mean a database that contains thousands of tables and each table contains thousands of rows. For example, we have a database named X. It has hundreds of tables, and each table has millions of rows. And only a few tables get updated frequently. Other tables are rarely changed and have very few transactions performed on them. As I mentioned before, to keep the database performance up to the mark, table statistics must be up to date. So we create an SQL maintenance plan to update the statistics of all tables within the X database. When SQL server updates the statistics of a table, it utilizes a significant amount of resources which can lead to a performance problem. So, it takes a long time to update statistics of hundreds of big tables and while statistics are being updated, the performance of the database reduces significantly. In such circumstances, it is always advisable to update the statistics only for the tables that are updated frequently. You can keep track of changes in data volume or number of rows over the time by using the following dynamic management views: 1. sys.partitions provides information about the total number of rows in a table. 2. sys.dm_db_partition_stats provides information about row counts and page counts, per partition. 3. sys.dm_db_index_physical_stats provides information about the number of rows and pages, plus information about index fragmentation and more. The details about data volume are important, but they do not make the picture of database activity complete. For example, a staging table that has nearly the same number of records can be deleted from the table or inserted in a table every day. Due to that, a snapshot of the number of rows would suggest that the table is static. It might be possible that the records added and deleted have very different values that change the data distribution heavily. In this case, automatically updating statistics in SQL Server makes statistics meaningless. Therefore, tracking the number of modifications to a table is very useful. This can be done in the following ways: 1. rowmodctr column in sys.sysindexes 2. modified_count column in sys.system_internals_partition_columns 3. modification_counter column in sys.dm_db_stats_properties Thus, as I explained earlier, if you have limited time for database maintenance, it is always advisable to update the statistics only for the tables with a higher frequency of data change (insert / update / delete). To do that efficiently, I have created a script that updates the statistics for the “active” tables. The script performs the following tasks: • Declares the required parameters • Creates a temporary table named #tempstatistics to store the table name, schema name, and database name • Creates another table named #tempdatabase to store the database name. First, execute the following script to create two tables:

DECLARE @databasename VARCHAR(500) DECLARE @i INT=0 DECLARE @DBCOunt INT DECLARE @SQLCOmmand NVARCHAR(max) DECLARE @StatsUpdateCOmmand NVARCHAR(max) CREATE TABLE #tempstatistics ( databasename VARCHAR(max), tablename VARCHAR(max), schemaname VARCHAR(max) ) CREATE TABLE #tempdatabases ( databasename VARCHAR(max) ) INSERT INTO #tempdatabases (databasename) SELECT NAME FROM sys.databases WHERE database_id > 4 ORDER BY NAME

Next, write a while loop to create a dynamic SQL query that iterates through all the databases and inserts a list of tables that have a modification counter greater than 200 into the #tempstatistics table. To obtain information about data changes, I use sys.dm_db_stats_properties. Study the following code example:

SET @DBCOunt=(SELECT Count(*) FROM #tempdatabases) WHILE ( @i < @DBCOunt ) BEGIN DECLARE @DBName VARCHAR(max) SET @DBName=(SELECT TOP 1 databasename FROM #tempdatabases) SET @SQLCOmmand= ' use [' + @DBName + ']; select distinct ''' + @DBName+ ''', a.TableName,a.SchemaName from (SELECT obj.name as TableName, b.name as SchemaName,obj.object_id, stat.name, stat.stats_id, last_updated, modification_counter FROM [' + @DBName+ '].sys.objects AS obj inner join ['+ @DBName + '].sys.schemas b on obj.schema_id=b.schema_id INNER JOIN [' + @DBName+ '].sys.stats AS stat ON stat.object_id = obj.object_id CROSS APPLY [' + @DBName+'].sys.dm_db_stats_properties(stat.object_id, stat.stats_id) AS sp WHERE modification_counter > 200 and obj.name not like ''sys%''and b.name not like ''sys%'')a' INSERT INTO #tempstatistics (databasename, tablename, schemaname) EXEC Sp_executesql @SQLCOmmand

Now, create the second loop within the first loop. It will generate a dynamic SQL Query that updates the statistics with full scan. See the code example below:

DECLARE @j INT=0 DECLARE @StatCount INT SET @StatCount =(SELECT Count(*) FROM #tempstatistics) WHILE @J < @StatCount BEGIN DECLARE @DatabaseName_Stats VARCHAR(max) DECLARE @Table_Stats VARCHAR(max) DECLARE @Schema_Stats VARCHAR(max) DECLARE @StatUpdateCommand NVARCHAR(max) SET @DatabaseName_Stats=(SELECT TOP 1 databasename FROM #tempstatistics) SET @Table_Stats=(SELECT TOP 1 tablename FROM #tempstatistics) SET @Schema_Stats=(SELECT TOP 1 schemaname FROM #tempstatistics) SET @StatUpdateCommand='Update Statistics [' + @DatabaseName_Stats + '].[' + @Schema_Stats + '].[' + @Table_Stats + '] with fullscan' EXEC Sp_executesql @StatUpdateCommand SET @j=@j + 1 DELETE FROM #tempstatistics WHERE databasename = @DatabaseName_Stats AND tablename = @Table_Stats AND schemaname = @Schema_Stats END SET @I=@i + 1 DELETE FROM #tempdatabases WHERE databasename = @DBName END

Once the script execution completed, it will drop all temporary tables.

SELECT * FROM #tempstatistics DROP TABLE #tempdatabases DROP TABLE #tempstatistics

The entire script will appear as follows:

--set count on CREATE PROCEDURE Statistics_maintenance AS BEGIN DECLARE @databasename VARCHAR(500) DECLARE @i INT=0 DECLARE @DBCOunt INT DECLARE @SQLCOmmand NVARCHAR(max) DECLARE @StatsUpdateCOmmand NVARCHAR(max) CREATE TABLE #tempstatistics ( databasename VARCHAR(max), tablename VARCHAR(max), schemaname VARCHAR(max) ) CREATE TABLE #tempdatabases ( databasename VARCHAR(max) ) INSERT INTO #tempdatabases (databasename) SELECT NAME FROM sys.databases WHERE database_id > 4 ORDER BY NAME SET @DBCOunt=(SELECT Count(*) FROM #tempdatabases) WHILE ( @i < @DBCOunt ) BEGIN DECLARE @DBName VARCHAR(max) SET @DBName=(SELECT TOP 1 databasename FROM #tempdatabases) SET @SQLCOmmand= ' use [' + @DBName + ']; select distinct ''' + @DBName+ ''', a.TableName,a.SchemaName from (SELECT obj.name as TableName, b.name as SchemaName,obj.object_id, stat.name, stat.stats_id, last_updated, modification_counter FROM [' + @DBName+ '].sys.objects AS obj inner join ['+ @DBName + '].sys.schemas b on obj.schema_id=b.schema_id INNER JOIN [' + @DBName+ '].sys.stats AS stat ON stat.object_id = obj.object_id CROSS APPLY [' + @DBName+'].sys.dm_db_stats_properties(stat.object_id, stat.stats_id) AS sp WHERE modification_counter > 200 and obj.name not like ''sys%''and b.name not like ''sys%'')a' INSERT INTO #tempstatistics (databasename, tablename, schemaname) EXEC Sp_executesql @SQLCOmmand DECLARE @j INT=0 DECLARE @StatCount INT SET @StatCount =(SELECT Count(*) FROM #tempstatistics) WHILE @J < @StatCount BEGIN DECLARE @DatabaseName_Stats VARCHAR(max) DECLARE @Table_Stats VARCHAR(max) DECLARE @Schema_Stats VARCHAR(max) DECLARE @StatUpdateCommand NVARCHAR(max) SET @DatabaseName_Stats=(SELECT TOP 1 databasename FROM #tempstatistics) SET @Table_Stats=(SELECT TOP 1 tablename FROM #tempstatistics) SET @Schema_Stats=(SELECT TOP 1 schemaname FROM #tempstatistics) SET @StatUpdateCommand='Update Statistics [' + @DatabaseName_Stats + '].[' + @Schema_Stats + '].[' + @Table_Stats + '] with fullscan' EXEC Sp_executesql @StatUpdateCommand SET @j=@j + 1 DELETE FROM #tempstatistics WHERE databasename = @DatabaseName_Stats AND tablename = @Table_Stats AND schemaname = @Schema_Stats END SET @I=@i + 1 DELETE FROM #tempdatabases WHERE databasename = @DBName END SELECT * FROM #tempstatistics DROP TABLE #tempdatabases DROP TABLE #tempstatistics END

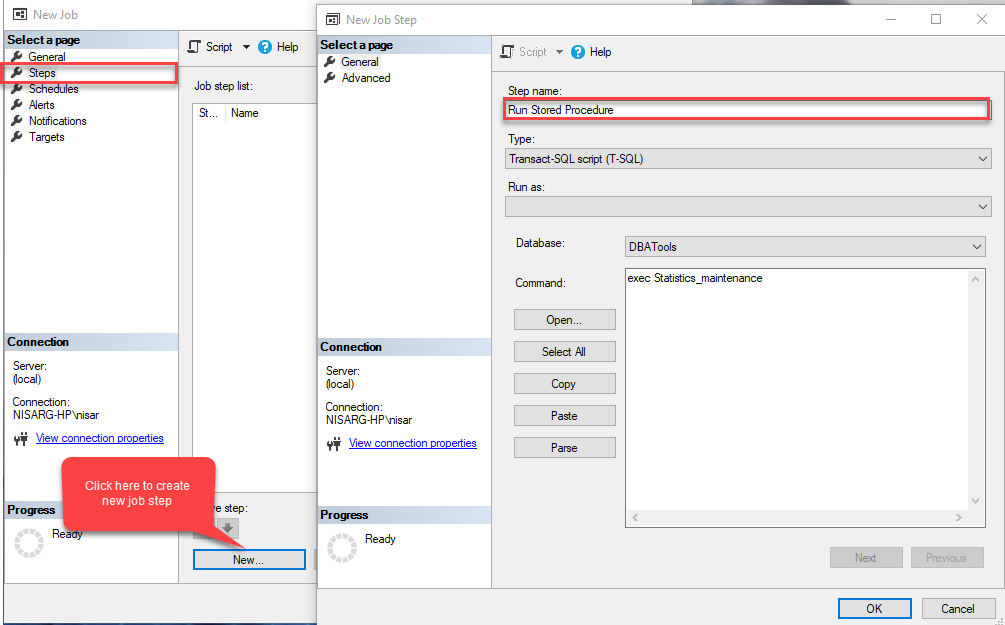

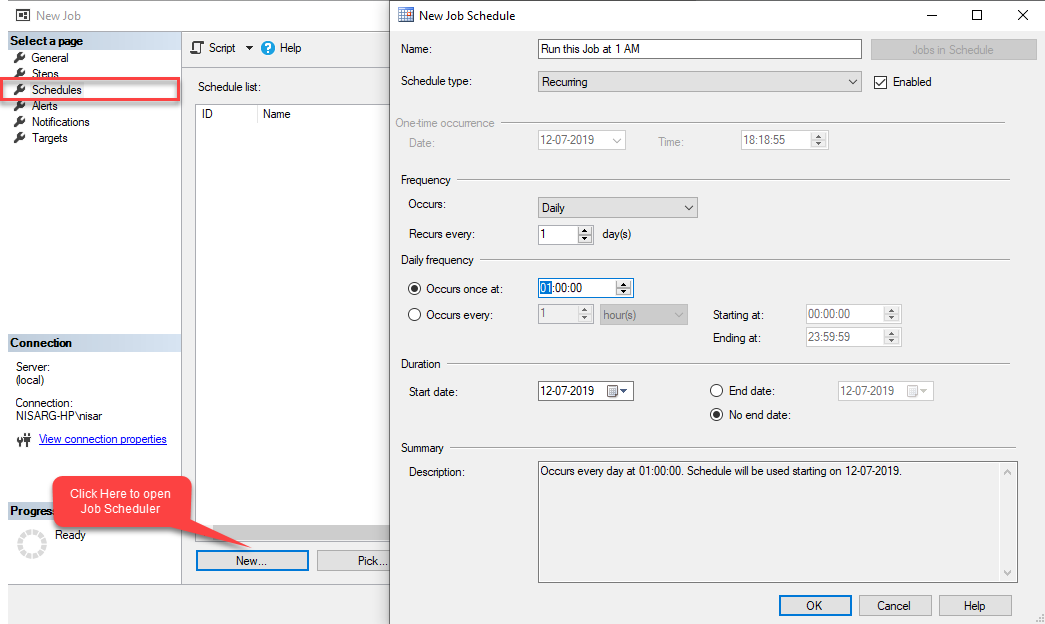

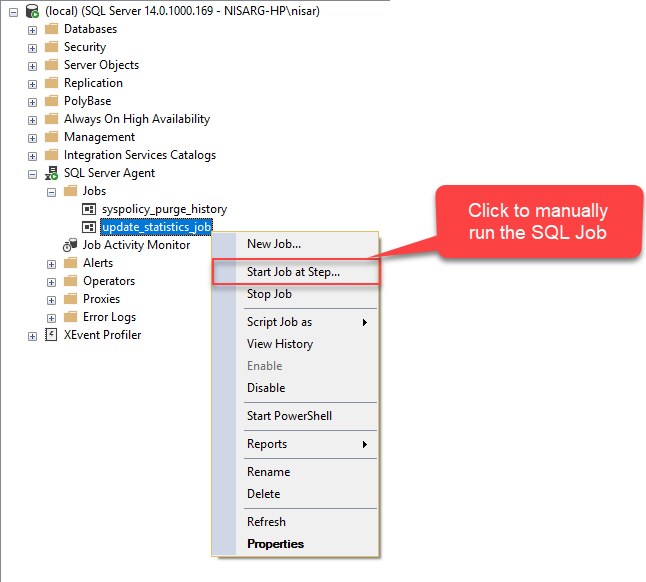

You can also automate this script by creating an SQL Server Agent job which will execute it at a scheduled time. A step by step instruction of automating this job is given below.

Creating an SQL Job

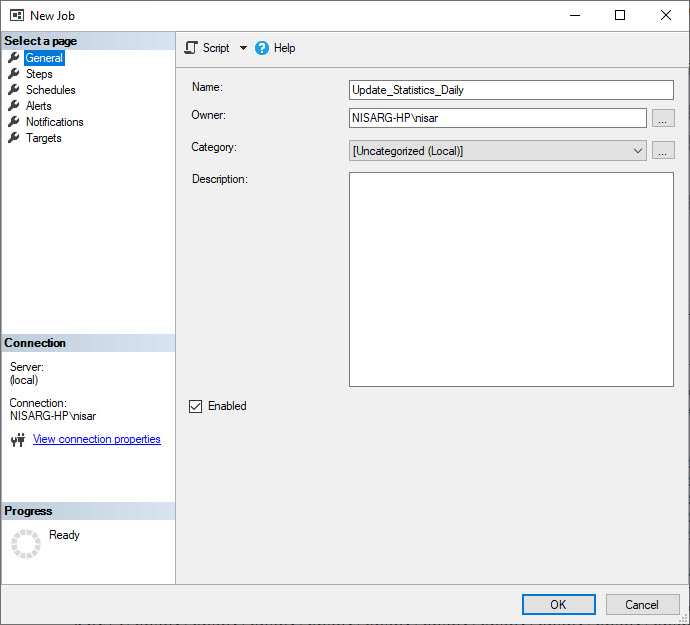

First, let’s create an SQL Job to automate the process. To do that, open SSMS, connect to the desired server and expand SQL Server Agent, right-click on Jobs and select New Job.

EXEC Statistics_maintenance

Summary

In this article, the following issues have been covered: 1. How to update the statistics of tables using T-SQL Script. 2. How to obtain information about changes in data volume and frequency of data changes. 3. How to create the script that updates statistics on active tables. 4. How to create an SQL Server Agent Job to execute the script at the scheduled time.

Tags: sql server, t-sql, update statistics Last modified: September 20, 2021