We started talking about SQL Server Transactional Replication issues earlier. Now, we are going to continue with a few more hands-on demos to understand frequently faced Replication performance issues and how to troubleshoot them correctly.

We have already discussed such problems as Configuration issues, Permission issues, Connectivity issues, and Data Integrity issues along with troubleshooting and fixing them. Now, we are going to focus on various Performance issues and Corruption issues impacting SQL Server Replication.

Since Corruption issues are a huge topic, we will discuss how their impacts in this article only and won’t go into detail. I’ve picked several scenarios that can fall under Performance and Corruption issues based upon my experience:

- Performance Issues

- Long-running Active Transactions in Publisher database

- Bulk INSERT/UPDATE/DELETE operations on Articles

- Huge data changes within a single Transaction

- Blockings in the distribution database

- Corruptions related issues

- Publisher Database Corruptions

- Distribution Database Corruptions

- Subscriber Database Corruptions

- MSDB Database Corruptions

Performance Issues

SQL Server Transactional Replication is a complicated architecture that involves several parameters like the Publisher database, the Distributor (distribution) database, the Subscriber database, and several Replication Agents executing as SQL Server Agent jobs.

As we have discussed all these items in detail across our previous articles, we know the importance of each one to the Replication functionality. Anything impacting these components can affect the SQL Server Replication performance.

For example, the Publisher database instance is holding a critical database with lots of transactions per second. However, the Server resources have a bottleneck like the consistent CPU usage above 90% or Memory usage above 90%. It will definitely have an impact on the Log Reader Agent Job performance which reads the change data from Transactional logs of the Publisher database.

Similarly, any such scenarios across Distributor or Subscriber database instances can impact Snapshot Agent or Distribution Agent. So, as a DBA, you need to ensure that the server resources like CPU, Physical Memory, and Network bandwidth are efficiently configured for the Publisher, Distributor, and Subscriber database instances.

Assuming that the Publisher, Subscriber, and Distributor database servers are configured correctly, we can still have the Replication performance issues when encountering the below scenarios.

Long-running Active Transactions in Publisher Database

As the name indicates, Long-Running Active transactions show that there is an Application call or a user operation within the transaction scope executing for a long time.

Finding a Long-Running Active Transaction means that the transaction is not yet committed and can be either rolled back or committed by the application. This will prevent the Transaction Log from being truncated, resulting in the Transaction Log file size increasing continuously.

Log Reader Agent scans for all Committed records that are marked for replication from Transactional Logs in a serialized order based on the Log Sequence Number (LSN), skipping all other changes happening for articles that aren’t replicated. If the Long-Running Active transaction commands aren’t yet committed, then Replication will skip sending those commands and send all other committed transactions to distribution database. Once the Long-Running Active transaction is committed, records will be sent to distribution database and till that time the inactive portion of the Transaction Log file of Publisher DB will not be cleared thereby causing the Transaction Log File of Publisher database size to increase.

We can test the Long-Running Active Transaction scenario by performing the below steps:

By default, Distribution Agent cleans up all committed changes to the Subscriber database, retaining the last record to monitor the new changes based upon Log Sequence Number (LSN).

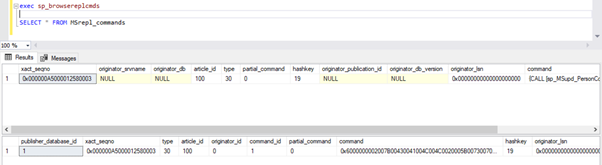

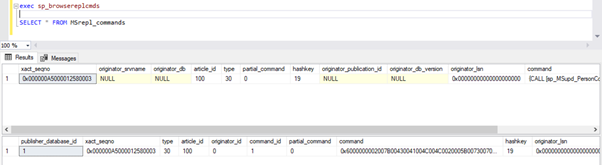

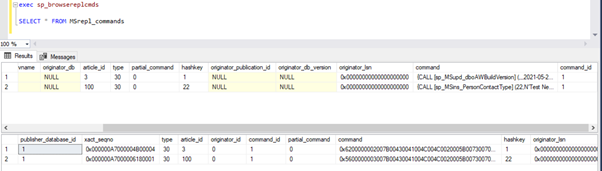

We can execute the below queries to check the status of the records available in MSRepl_Commands tables or using the sp_browsereplcmds procedure in the distribution database:

exec sp_browsereplcmds

GO

SELECT * FROM MSrepl_commands

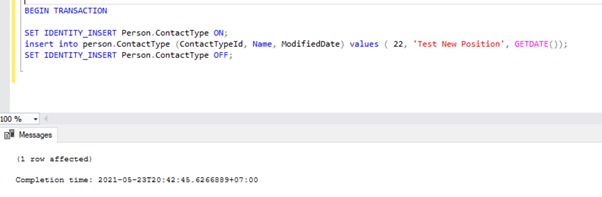

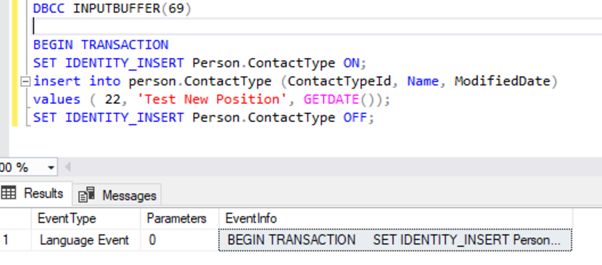

Now, open a new query window and execute the below script to create a long-running active transaction on the AdventureWorks database. Notice that the below script does not include any ROLLBACK or COMMIT TRANSACTION commands. Hence, we advise not to run these kinds of commands on the Production database.

BEGIN TRANSACTION

SET IDENTITY_INSERT Person.ContactType ON;

insert into person.ContactType (ContactTypeId, Name, ModifiedDate) values ( 22, 'Test New Position', GETDATE());

SET IDENTITY_INSERT Person.ContactType OFF;

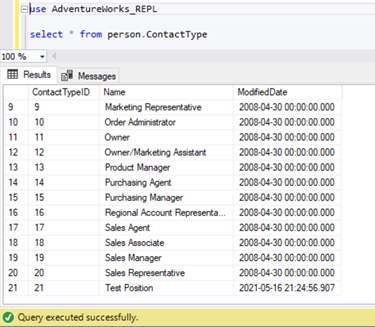

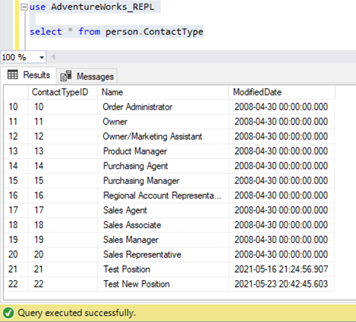

We can verify that this new record hasn’t been replicated to the Subscriber database. For that, we’ll perform the SELECT statement on the Person.ContactType table in the Subscriber database:

Let’s verify whether the above INSERT command was read by Log Reader Agent and written into the Distribution database.

Execute the scripts from the part of Step 1 again. Results still show the same old status, confirming that the record wasn’t read from the Transaction logs of the Publisher database.

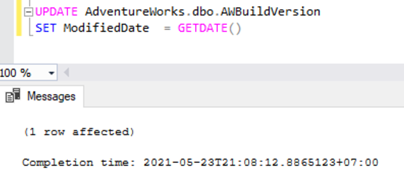

Now open a New Query window and execute the below UPDATE script to see whether the Log Reader Agent was able to skip the long-running active transaction and read the changes done by this UPDATE statement.

UPDATE AdventureWorks.dbo.AWBuildVersion

SET ModifiedDate = GETDATE()

Check the Distribution database whether the Log Reader Agent could capture this change. Run the script as part of Step 1:

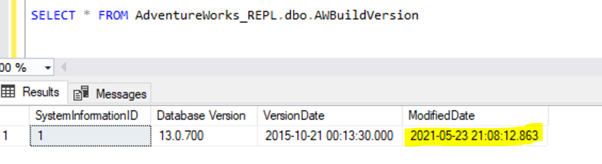

Since the above UPDATE statement was committed in the Publisher database, Log Reader Agent could scan this change and insert it into the Distribution database. Subsequently, it applied this change to the Subscriber database as shown below:

INSERT on Person.ContactType will be replicated to the Subscriber database only after the INSERT transaction is committed in the Publisher database. Before we commit, we can quickly check how to identify a Long-Running Active transaction, understand it, and handle it efficiently.

Identify a Long-Running Active Transaction

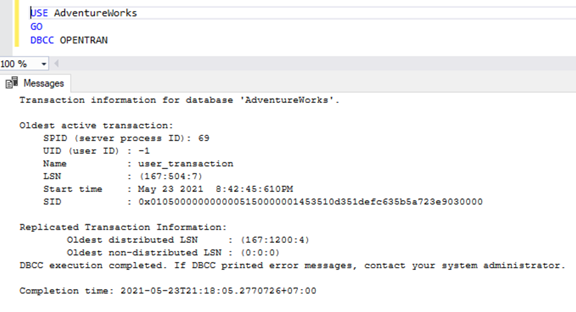

To check for any Long-Running Active Transactions on any database, open up a new Query Window and connect to the respective database that we need to check. Execute the DBCC OPENTRAN console command – it is a Database Console Command to view the transactions open in the database at the time of execution.

USE AdventureWorks

GO

DBCC OPENTRAN

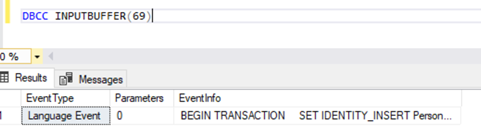

Now we know that there was a SPID (server process ID) 69 running for a long time. Let’s verify which command was executed on that transaction using the DBCC INPUTBUFFER console command (a Database Console Command used to identify the command or operation that is happening on the selected Server process ID).

For readability, I am copying the EventInfo field value and formatting it to show the command we have executed earlier.

If there aren’t any Long-Running Active transactions on the selected database, we’ll get the below message:

Similar to the DBCC OPENTRAN console command, we can SELECT from DMV named sys.dm_tran_database_transactions to get more detailed results (refer to the MSDN article for more data).

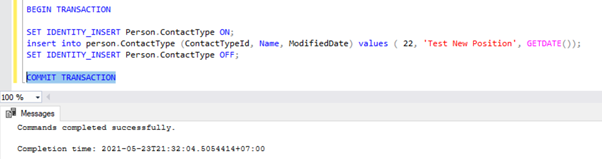

Now, we know how to identify the Long-running transaction. We can commit the transaction and see how the INSERT statement gets replicated.

Go to the window where we have inserted the record to the Person.ContactType table within the Transaction Scope and execute COMMIT TRANSACTION as shown below:

Execution of COMMIT TRANSACTION committed the record into the Publisher database. Hence, it should be visible in the Distribution database and the Subscriber database:

If you have noticed, the older records from the Distribution database were cleaned up by the Distribution Agent Clean-up job. The new record for INSERT on Person.ContactType table was visible in the MSRepl_cmds table.

From our testing, we have learned the following things:

- The Log Reader Agent job of SQL Server Transactional Replication will scan for Committed records only from the Transactional Logs of Publisher database and INSERT into the Subscriber database.

- Order of changed data on the Publisher database sent to the Subscriber will be based on the Committed status and time on the Publisher database even though the replicated data will have the same time as of Publisher database.

- Identifying Long-Running Active Transactions can help in resolving Transactional Log File growth of Publisher or Distributor or Subscriber or any databases.

Bulk SQL INSERT/UPDATE/DELETE Operations on Articles

With huge data residing in the Publisher database, we often end up with requirements to INSERT or UPDATE or DELETE huge records to Replicated tables.

If the INSERT, or UPDATE, or DELETE operations are performed in a single Transaction, it will definitely end up in the Replication stuck for a long time.

Let’s say we need to INSERT 10 Million records into a replicated table. Inserting those records in a single shot will cause Performance issues.

INSERT INTO REplicated_table

SELECT * FROM Source_table

Instead, we can INSERT records in batches of 0.1 or 0.5 Million records in a WHILE loop or CURSOR loop, and it will ensure faster replication. We might not receive major issues for INSERT statements unless otherwise the table involved has lots of indexes. However, this will have a huge performance hit for the UPDATE or DELETE statements.

Assume we have added a new column to the Replicated table that has around 10 Million records. We want to update this new column with a default value.

Ideally, the below command will work fine to UPDATE all 10 Million records with default value as Abc:

-- UPDATE 10 Million records on Replicated Table with some DEFAULT values

UPDATE Replicated_table

SET new_column = 'Abc'

However, to avoid impacts on Replication, we should execute the above UPDATE operation in batches of 0.1 or 0.5 Million records to avoid performance issues.

-- UPDATE in batches to avoid performance impacts on Replication

WHILE 1 = 1

BEGIN

UPDATE TOP(100000) Replicated_Table

SET new_Column = 'Abc'

WHERE new_column is NULL

IF @@ROWCOUNT = 0

BREAK

END

Similarly, if we need to DELETE around 10 Million records from a Replicated table, we can do it in batches:

-- DELETE 10 Million records on Replicated Table with some DEFAULT values

DELETE FROM Replicated_table

-- UPDATE in batches to avoid performance impacts on Replication

WHILE 1 = 1

BEGIN

DELETE TOP(100000) Replicated_Table

IF @@ROWCOUNT = 0

BREAK

END

Handling BULK INSERT, or UPDATE, or DELETE efficiently can help resolve the Replication issues.

Pro Tip: To INSERT huge data into a Replicated table in Publisher database, use the IMPORT/EXPORT wizard in SSMS, as it will insert records in batches of 10000 or based upon the record size faster calculated by SQL Server.

Huge Data Changes within Single Transaction

To maintain data integrity from the application or development perspective, many applications have Explicit transactions defined for critical operations. However, if a lot of operations (INSERT, UPDATE, or DELETE) perform within a single Transaction scope, the Log Reader Agent will first wait for the transaction to complete, as we have seen earlier.

Once the transaction gets committed by the application, the Log Reader Agent needs to scan those huge data changes performed on the Publisher database transaction logs. During that scan, we can see the warnings or informational messages in the Log Reader Agent like

The Log Reader Agent is scanning the transaction log for commands to be replicated. Approximately xxxxxx log records have been scanned in pass # xxxx of which were marked for replication, elapsed time xxxxxxxxx (ms)

Before identifying the solution for this scenario, we need to understand how the Log Reader Agent scans records from the Transactional Logs and inserts records into the Distribution database MSrepl_transactions and MSrepl_cmds tables.

SQL Server internally has a Log Sequence Number (LSN) inside the Transactional Logs. The Log Reader Agent makes use of the LSN values to scan changes marked for SQL Server Replication in order.

Log Reader Agent executes the sp_replcmds extended stored procedure to fetch the commands marked for Replication from the Transactional Logs of Publisher database.

Sp_replcmds accepts an input parameter named @maxtrans to fetch the maximum number of transactions. The default value would be 1 meaning it will scan whatever number of transactions available from logs to be sent to the distribution database. If there are 10 INSERT operations performed via a single Transaction and committed in the Publisher database, a single batch might contain 1 Transaction with 10 commands.

If many transactions with lesser commands are identified, the Log Reader Agent will combine multiple transactions or the XACT sequence number to a single Replication Batch. But it stores as a different XACT Sequence number in the MSRepl_transactions table. Individual commands belonging to that transaction will be captured in the MSRepl_commands table.

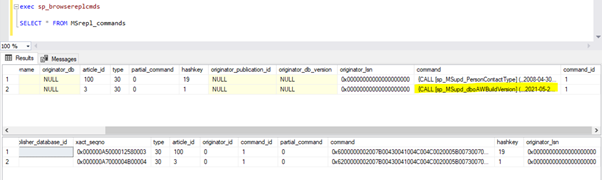

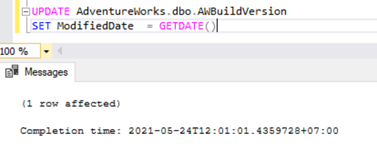

To verify the things we have discussed above, I’m updating the ModifiedDate column of the dbo.AWBuildVersion table to today’s date and see what happens:

UPDATE AdventureWorks.dbo.AWBuildVersion

SET ModifiedDate = GETDATE()

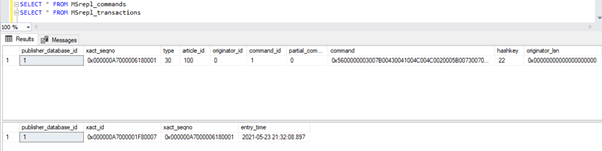

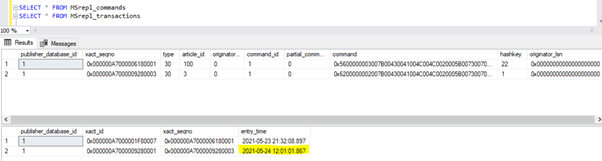

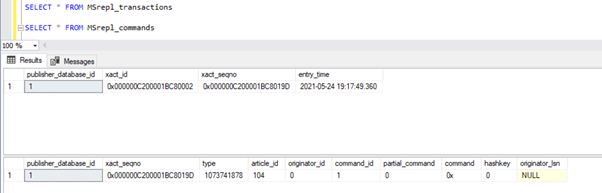

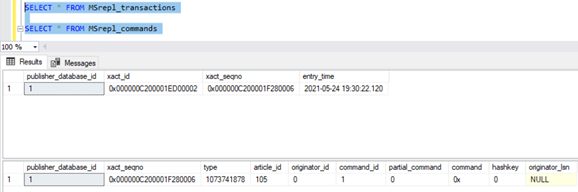

Before executing the UPDATE, we verify the records present in the MSrepl_commands and MSrepl_transactions tables:

Now, execute the above UPDATE script and verify the records present in those 2 tables:

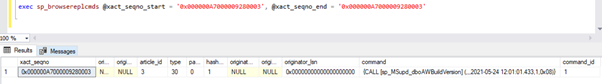

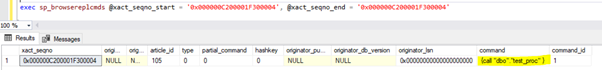

A new record with the UPDATE time was inserted into the MSrepl_transactions table with the nearby entry_time. Checking the command on this xact_seqno will show the list of logically grouped commands using the sp_browsereplcmds procedure.

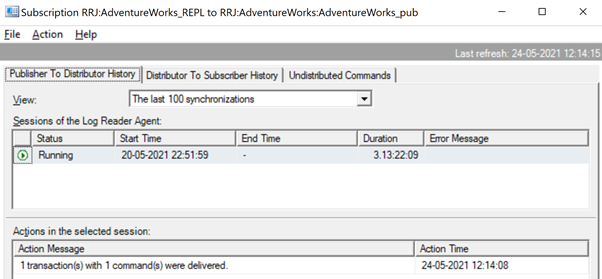

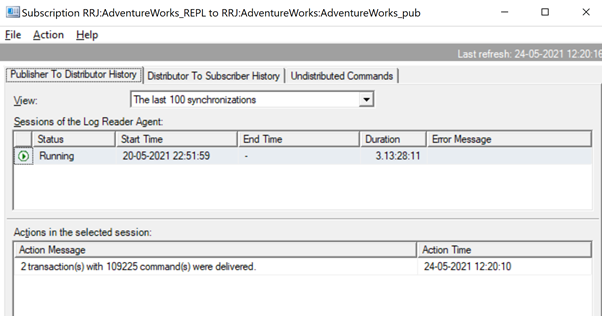

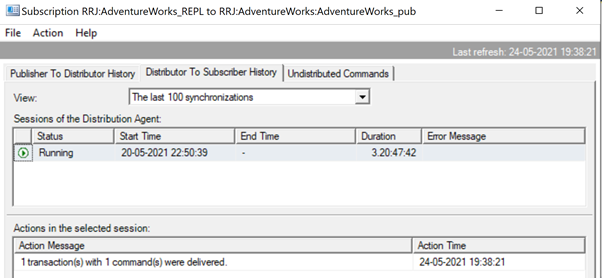

In the Replication Monitor, we can see a single UPDATE statement captured under 1 Transaction(s) with 1 command(s) from the Publisher to the Distributor.

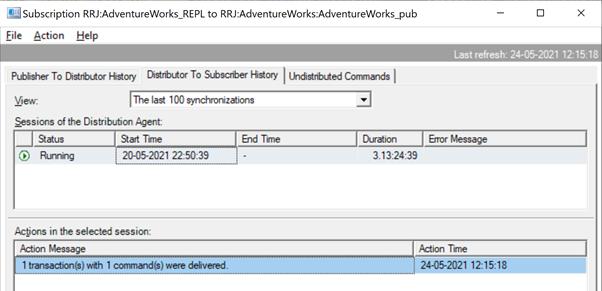

And we can see the same command getting delivered from the Distributor to Subscriber in a fraction of a second’s difference. It indicates that the Replication is happening properly.

Now, if there is a huge number of transactions combined in a single xact_seqno, we can see messages like 10 transaction(s) with 5000 command(s) were delivered.

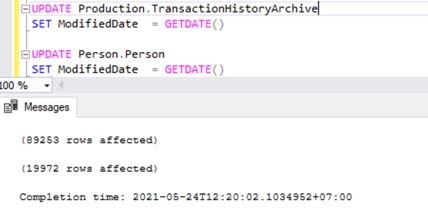

Let’s check this by executing UPDATE on 2 different tables at the same time:

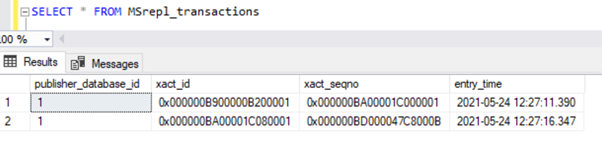

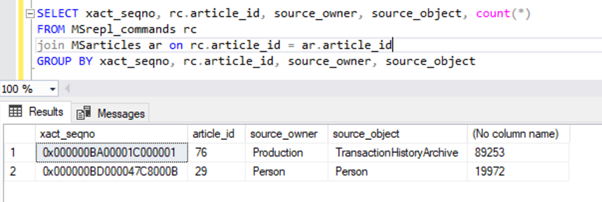

We can see two transaction records in the MSrepl_transactions table matching the two UPDATE operations and then the no. of records in that table matching the no. of records updated.

The result from the MSrepl_transactions table:

The result from the MSrepl_commands table:

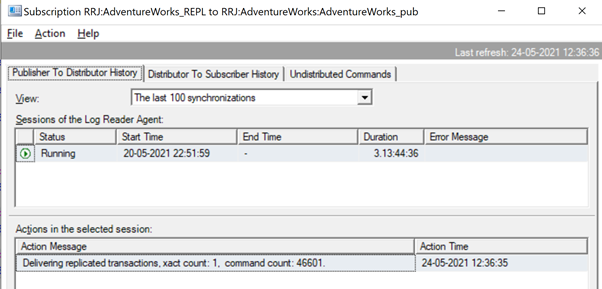

However, we have noticed that these 2 transactions are logically grouped by the Log Reader Agent and combined in a single batch as 2 transactions with 109225 commands.

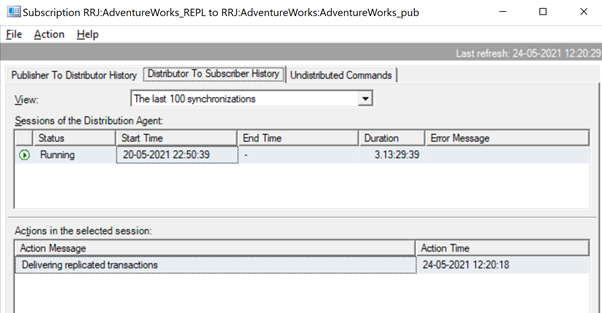

But before that, we might see messages like Delivering Replicated transactions, xact count: 1, command count 46601.

This will happen till the Log Reader Agent scans the complete set of changes and identifies that 2 UPDATE transactions were fully read from the Transactional Logs.

Once the commands are fully read from the Transactional Logs, we see that 2 transactions with 109225 commands were delivered by the Log Reader agent:

Since the Distribution agent is waiting for a huge transaction to get replicated, we might see a message like Delivering Replicated transactions indicating that there was a huge transaction getting replicated, and we need to wait for it to get replicated completely.

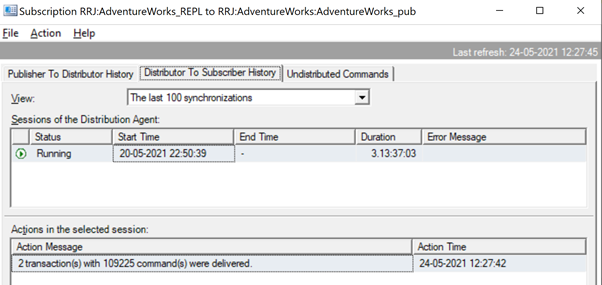

Once replicated, we can see the below message in the Distribution Agent as well:

Several ways are helpful to resolve these problems.

Way 1: CREATE New SQL Stored Procedure

You need to create a new stored procedure and encapsulate the application logic into it under the scope of Transaction.

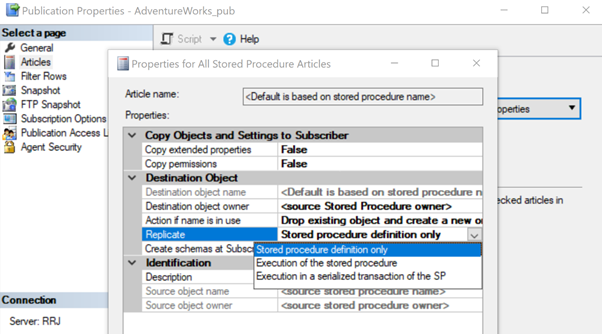

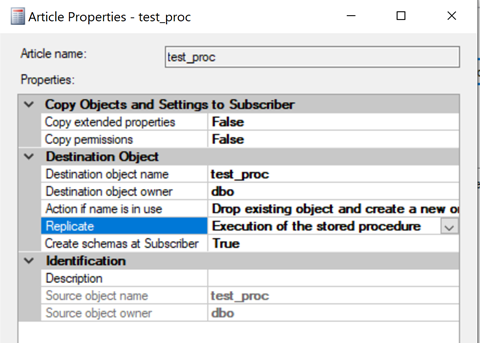

Once it is created, add that Stored Procedure article to Replication and change the article property Replicate to the Execution of the Stored Procedure option.

It will help execute the Stored Procedure article on the Subscriber instead of replicating all individual data changes that were happening.

Let’s review how the Execution of the Stored Procedure option for Replicate reduces the load on Replication. To do that, we can create a test Stored Procedure as shown below:

CREATE procedure test_proc

AS

BEGIN

UPDATE AdventureWorks.dbo.AWBuildVersion

SET ModifiedDate = GETDATE()

UPDATE TOP(10) Production.TransactionHistoryArchive

SET ModifiedDate = GETDATE()

UPDATE TOP(10) Person.Person

SET ModifiedDate = GETDATE()

END

The above procedure will UPDATE a single record on the AWBuildVersion table and 10 records each on the Production.TransactionHistoryArchive and Person.Person tables totaling up to 21 record changes.

After creating this new procedure across both the Publisher and Subscriber, add it to Replication. For that, right-click on Publication and choose the procedure article to Replication with the default Stored Procedure definition only option.

Once done, we can verify the records available in the MSrepl_transactions and MSrepl_commands tables.

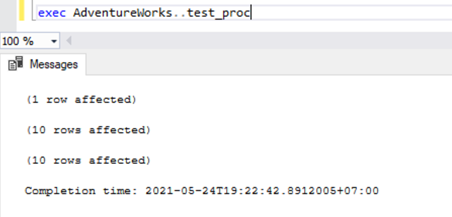

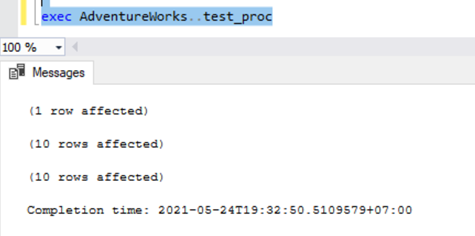

Now, let’s execute the procedure in the Publisher database to see how many records are tracked out.

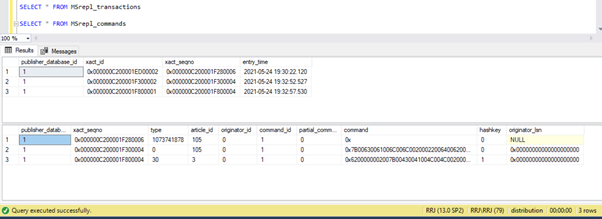

We can see the following on the Distribution tables MSrepl_transactions and MSrepl_commands:

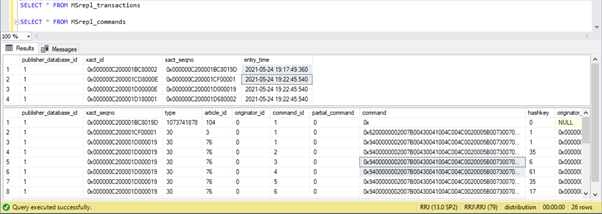

Three xact_seqno were created for three UPDATE operations in the MSrepl_transactions table, and 21 commands got inserted into the MSrepl_commands table.

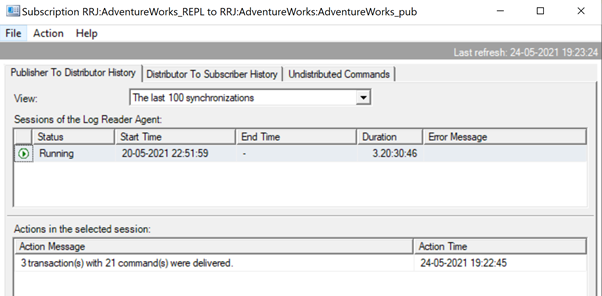

Open up Replication Monitor and see whether they are sent as 3 different Replication batches or a single batch with 3 transactions together.

We can see that three xact_seqno got consolidated as a single Replication batch. Hence, we can see that 3 transactions with 21 commands were delivered successfully.

Let’s remove the procedure from Replication and add it back with the second Execution of the Stored Procedure option. Now, execute the procedure and see how the records are getting replicated.

Checking records from Distribution tables shows the below details:

Now, execute the procedure on the Publisher database and see how many records are getting logged in Distribution tables. Execution of a procedure updated 21 records (1 record, 10 records, and 10 records) as earlier.

Verifying Distribution tables shows the below data:

Let’s take a quick look at sp_browsereplcmds to see the actual command received:

The command is “{call “dbo”.”test_proc” }” which will get executed on the Subscriber database.

In the Replication Monitor, we can see that only 1 transaction(s) with 1 command(s) was delivered via Replication:

In our test case, we have used a procedure with only 21 data changes. However, if we do that for a complicated procedure involving millions of changes, then the Stored Procedure approach with the Execution of the Stored Procedure option will be efficient in reducing the Replication load.

We need to validate this approach by checking whether the procedure has the logic to update only the same set of records in the Publisher and Subscriber databases. Otherwise, this will create data inconsistency issues across the Publisher and Subscriber.

Way 2: Configuring MaxCmdsInTran, ReadBatchSize, and ReadBatchThreshold Log Reader Agent Parameters

MaxCmdsInTran – indicates the maximum number of Commands that can be logically grouped within a Transaction while reading data from the Transactional Logs of Publisher database and written to the Distribution database.

In our earlier tests, we noticed that around 109225 commands got accumulated in a single Replication exact sequence, resulting in slight slowness or latency. If we set the MaxCmdsInTran parameter to 10000, the single xact sequence number will be split into 11 xact sequences resulting in faster delivery of commands from Publisher to Distributor. Even though this option helps to reduce the contention of the Distribution database and replicate the data faster from the Publisher to the Subscriber database, be careful while using this option. It might end up delivering the data to the Subscriber database and accessing it from the Subscriber database tables before the end of the original transaction scope.

ReadBatchSize – This parameter might not be helpful for a single huge transaction scenario. However, it helps when there are lots and lots of smaller transactions happening on the Publisher database.

If the number of commands per transaction is fewer, the Log Reader Agent will combine multiple changes to a single Replication command transaction scope. The Read Batch size indicates how many Transactions can be read in the Transaction Log before sending changes to the Distribution database. The values can be between 500 and 10000.

ReadBatchThreshold – indicates the number of commands to be read from the transactional log of Publisher database before being sent to the Subscriber with a default value of 0 to scan the complete log file. However, we can reduce this value to send data faster by limiting it to 10000 or 100000 commands like that.

Way 3: Configuring the Best Values for SubscriptionStreams Parameter

SubscriptionStreams – indicates the number of connections that a Distribution agent can execute in parallel to fetch data from the Distribution database and propagate it to the Subscriber database. The default value is 1 suggesting only one stream or connection from the distribution to the subscriber database. Values can be any between 1 to 64. If more Subscription streams are added, it might end up on CXPACKET congestion (in other words, parallelism). Hence, you should take care while configuring this option in Production.

To summarize, try avoiding huge INSERT, UPDATE, or DELETE in a single transaction. If it is impossible to eliminate these operations, the best option would be testing the above ways and picking the one that suits your specific conditions best of all.

Blockings in Distribution Database

The distribution database is the heart of the SQL Server Transactional Replication and if it is not maintained properly, there will be lots of performance issues.

To summarize all recommended practices for the distribution database configuration, we need to ensure the below configurations are done properly:

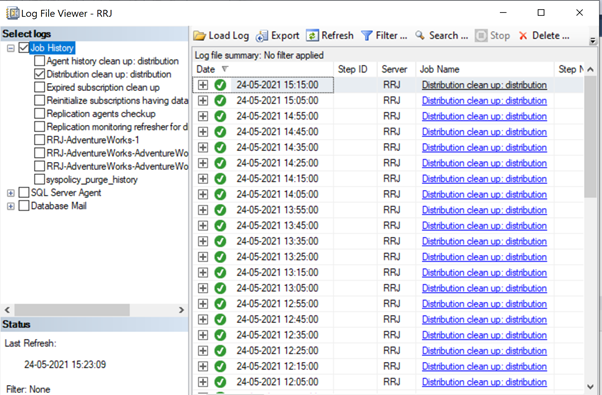

- Data files of the distribution databases should be placed on high IOPS drives. If the Publisher database will have lots of data changes, we need to ensure that the distribution database is placed on a drive with high IOPS. It will be continually receiving data from the Log Reader agent, sending data to the Subscriber database via the Distribution agent. All replicated data will get purged from the distribution database every 10 minutes via the Distribution clean-up job.

- Configure the Initial File size and Autogrowth properties of the Distribution database with the recommended values based upon the Publisher database activity levels. Otherwise, it will lead to data and log files fragmentation causing performance issues.

- Include distribution databases in the regular Index Maintenance jobs configured on the Servers where the distribution database is located.

- Include distribution databases in the Full backup jobs schedule to troubleshoot any specific issues.

- Ensure that the Distribution Clean-up: distribution job is running every 10 minutes as per the default schedule. Otherwise, the size of the distribution database keeps increasing and leads to performance issues.

As we have noticed so far, in the distribution database, the key tables involved are MSrepl_transactions and MSrepl_commands. The records are inserted there by the Log Reader Agent job, selected by the Distribution agent job, applied at the Subscriber database, and then deleted or cleaned up by the Distribution Clean-up agent job.

If the distribution database isn’t configured properly, we can encounter session blockings across these 2 tables, which will result in SQL Server Replication performance issues.

We can execute the below query across any database to view the blocking sessions available in the current instance of SQL Server:

SELECT *

FROM sys.sysprocesses

where blocked > 0

order by waittime desc

If the above query returns any results, we can identify commands on those blocked sessions by executing the DBCC INPUTBUFFER(spid) console command and take actions accordingly.

Corruption-related Issues

An SQL Server database uses its algorithm or logic to store data into tables and keep it in extents or pages. Database Corruption is a process by which the physical state of the database-related files/extents/pages changes from normal to unstable or non-retrieval state making the data retrieval harder or impossible.

All SQL Server Databases are prone to Database Corruptions. The causes can be:

- Hardware failures like Disk, Storage, or Controller issues;

- Server OS failures like patching issues;

- Power failures resulting in an abrupt shutdown of Servers or improper shutdown of database.

If we can recover or repair databases without any data loss, the SQL Server Replication won’t be impacted. However, if there are any data losses while recovering or repairing corrupt databases, we will start receiving a lot of Data integrity issues we have discussed in our earlier article.

Corruptions can happen at various components, such as:

- Publisher Data/Logfile corruptions

- Subscriber Data/Logfile corruptions

- Distribution Database Data/Log File corruptions

- Msdb Database Data/Log File corruptions

If we receive a lot of data integrity issues after fixing up Corruption issues, it is recommended to remove the Replication completely, fix all Corruption issues in the Publisher, Subscriber, or Distributor database and then reconfigure Replication to fix it. Otherwise, data integrity issues will persist and lead to data inconsistency across the Publisher and Subscriber. The time required to fix the Data integrity issues in case of Corrupted databases will be much more compared to configuring Replication from scratch. Hence identify the level of Corruption encountered and take optimal decisions to resolve the Replication issues faster.

Wondering why msdb database corruption can harm Replication? Since msdb database hold all details related to SQL Server Agent Jobs including Replication Agent jobs, any corruption on msdb database will harm Replication. To recover quickly from msdb database corruptions, it is recommended to restore msdb database from the last Full Backup of msdb database. This also signifies the importance of taking Full Backups of all system databases including msdb database.

Conclusion

Thanks for successfully going through the final power-packed article about the Performance issues in the SQL Server Transactional Replication. If you have gone through all articles carefully, you should be able to troubleshoot almost any Transactional Replication-based issues and fix them out efficiently.

If you need any further guidance or have any Transactional Replication-related issues in your environment, you can reach out to me for consultation. And if I missed anything essential in this article, you are welcome to point to that in the Comments section.

Tags: performance, transactional replication Last modified: September 16, 2021